Istio Service Mesh

What is Service Mesh?

A service mesh is an infrastructure layer that manages how services communicate with each other. It makes communication manageable, visible, and controlled.

The Problem It Solves

Old Way (Without Service Mesh)

- Each service has its own communication logic (retries, timeouts, routing)

- Developers write this logic inside each service

- Problems:

- Hard to manage when you have many services

- Services written in different languages each need their own copy of the same logic

- Third-party services can't easily be modified to add this logic

- Configuration becomes messy

Service Mesh Way

- Extracts communication logic from services

- Puts it in a separate layer using network proxies

- Proxies handle all communication

- Control plane manages all proxies

Deployment Models

Deployment Model Comparison

| Model | Architecture | Pros | Cons | Istio Support |

|---|---|---|---|---|

| Sidecar | Proxy per pod | Strong isolation | Higher resource usage | ✅ Production-ready |

| Ambient | Node-level proxy (L4) + optional waypoint (L7) | Lower resource usage | Less isolation | ✅ Production-ready |

| Cilium Mesh | eBPF kernel-level (L4) + L7 when needed | Very efficient, secure | More complex | ❌ Not supported |

| gRPC | Proxy built into app | Fastest (no extra hop) | Requires code changes | ⚠️ Experimental (proxyless gRPC) |

1. Sidecar Model

- Each pod gets its own proxy container (the sidecar)

- Pros: Strong isolation between services

- Cons: Uses more resources (CPU, memory)

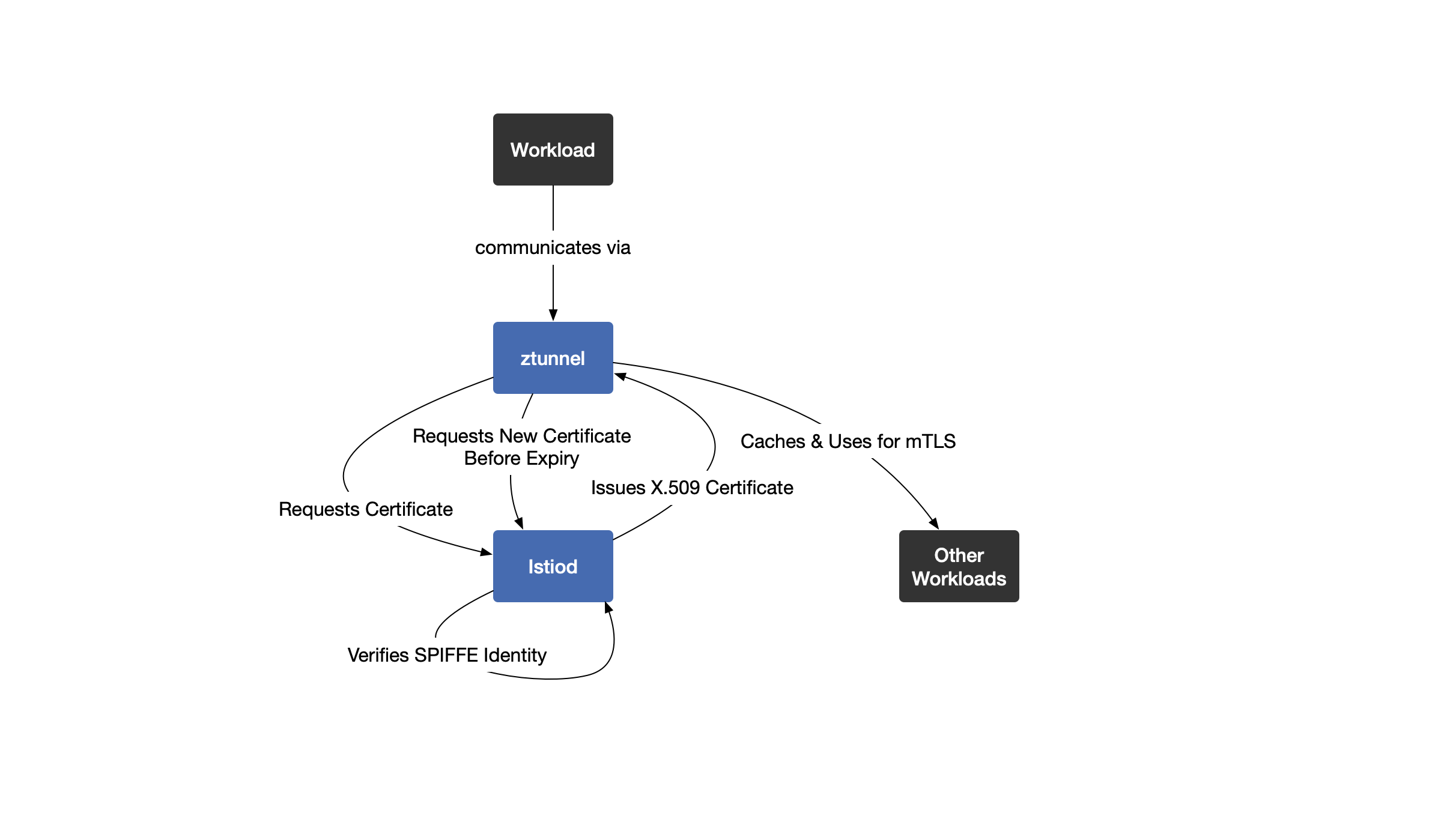

2. Ambient Mode

- ztunnel: Lightweight proxy per node for L4 traffic

- waypoint proxy: Optional L7 proxy at namespace or service level

- Pros: Lower resource consumption

- Cons: Less isolation than sidecar

3. Cilium Mesh Mode

- Uses eBPF (kernel-level networking) for L4 traffic

- Adds L7 proxy only when needed

- Pros: Very efficient and secure

- Cons: More complex setup

4. gRPC Mode

- Proxy built directly into the application

- Pros: Fastest performance (no extra network hop)

- Cons: Requires application code changes

Istio supports Sidecar and Ambient modes. Both are production-ready.

Why Use Service Mesh?

Security

- Automatic mTLS encryption between services

- Auto certificate rotation without manual intervention

- Building blocks for zero-trust networking (workload identity, mTLS, authorization policies)

Observability

- See all traffic, failures, and retries

- Detailed metrics for debugging

- Real-time monitoring of service health

Traffic Management

- Weighted routing (e.g., 90% old version, 10% new)

- Canary deployments for safe rollouts

- A/B testing capabilities

- Blue-green deployments
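Weighted routing comes down to choosing a destination version in proportion to configured weights. The Python sketch below only illustrates that idea with a seeded random generator; it is not how Envoy implements load balancing, and the version names v1/v2 are hypothetical.

```python
import random
from collections import Counter


def pick_version(weights: dict[str, int], rng: random.Random) -> str:
    """Choose a destination version in proportion to its weight (e.g. 90/10)."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def simulate(weights: dict[str, int], requests: int, seed: int = 42) -> Counter:
    """Route `requests` simulated calls and count where each one landed."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return Counter(pick_version(weights, rng) for _ in range(requests))


if __name__ == "__main__":
    counts = simulate({"v1": 90, "v2": 10}, requests=10_000)
    for version, n in sorted(counts.items()):
        print(f"{version}: {n / 10_000:.1%}")
```

With a 90/10 split, about 9 of every 10 simulated requests reach v1; the exact counts change with the seed.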

Resilience

- Automatic retries for failed requests

- Timeouts to prevent hanging requests

- Circuit breakers to stop calling broken services

- Rate limiting to prevent overload
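In Istio these behaviours are declared in configuration (retries and timeout on a VirtualService, outlier detection and connection-pool limits on a DestinationRule), so the application contains none of this code. As a rough mental model of what the proxy does on your behalf, here is a minimal Python sketch of retries with exponential backoff plus a consecutive-failure circuit breaker. The class and function names are hypothetical, and Envoy's real logic is more elaborate.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised instead of calling the service while the breaker is open."""


class CircuitBreaker:
    """Open after `max_failures` consecutive failures; allow a trial call after `reset_after` s."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open: skipping call to failing service")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip the breaker
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_retries(fn: Callable[[], str], attempts: int = 3, base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Call `fn`, retrying failures with exponential backoff (0.1 s, 0.2 s, ...)."""
    for attempt in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying an open circuit is pointless
        except Exception:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be >= 1")
```

A caller would combine them as call_with_retries(lambda: breaker.call(fetch_reviews)), where fetch_reviews stands for any hypothetical function that calls another service.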

Debugging

- Distributed tracing (Jaeger, Zipkin)

- See complete request path across services

- Identify bottlenecks and latency issues

Testing

- Fault injection for chaos engineering

- Test how system handles failures

- Simulate network delays and errors
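Fault injection wraps a call so that a chosen percentage of requests are delayed or fail outright. The Python sketch below models that with explicit percentages; the function name and the "injected fault" response body are illustrative assumptions.

```python
import random
import time
from typing import Callable, Tuple

Response = Tuple[int, str]  # (HTTP status, body)


def with_faults(handler: Callable[[], Response], *, delay_s: float, delay_pct: float,
                abort_status: int, abort_pct: float, rng: random.Random,
                sleep: Callable[[float], None] = time.sleep) -> Callable[[], Response]:
    """Wrap `handler` so a percentage of calls are delayed and/or aborted."""

    def faulty() -> Response:
        if rng.random() * 100 < delay_pct:
            sleep(delay_s)  # simulated network / upstream latency
        if rng.random() * 100 < abort_pct:
            return abort_status, "injected fault"  # fail without reaching the service
        return handler()

    return faulty
```

In Istio the same effect comes from the fault section of a VirtualService (delay and abort, each with a percentage), with no change to the application.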

Service mesh lets infrastructure handle communication, so developers can focus on business logic.

Introducing Istio

What is Istio?

Istio is an open-source service mesh implementation that provides three core capabilities:

1. Traffic Management

- Control traffic flow between services using configuration files

- Set circuit breakers, timeouts, retries with simple config

- No code changes needed

2. Observability

- Tracing, monitoring, and logging built-in

- See what's happening in your services

- Find and fix issues quickly

3. Security

- Authentication, authorization, and encryption at proxy level

- Change policies with configuration (no code changes)

- Enforce rules across all services

Istio Architecture

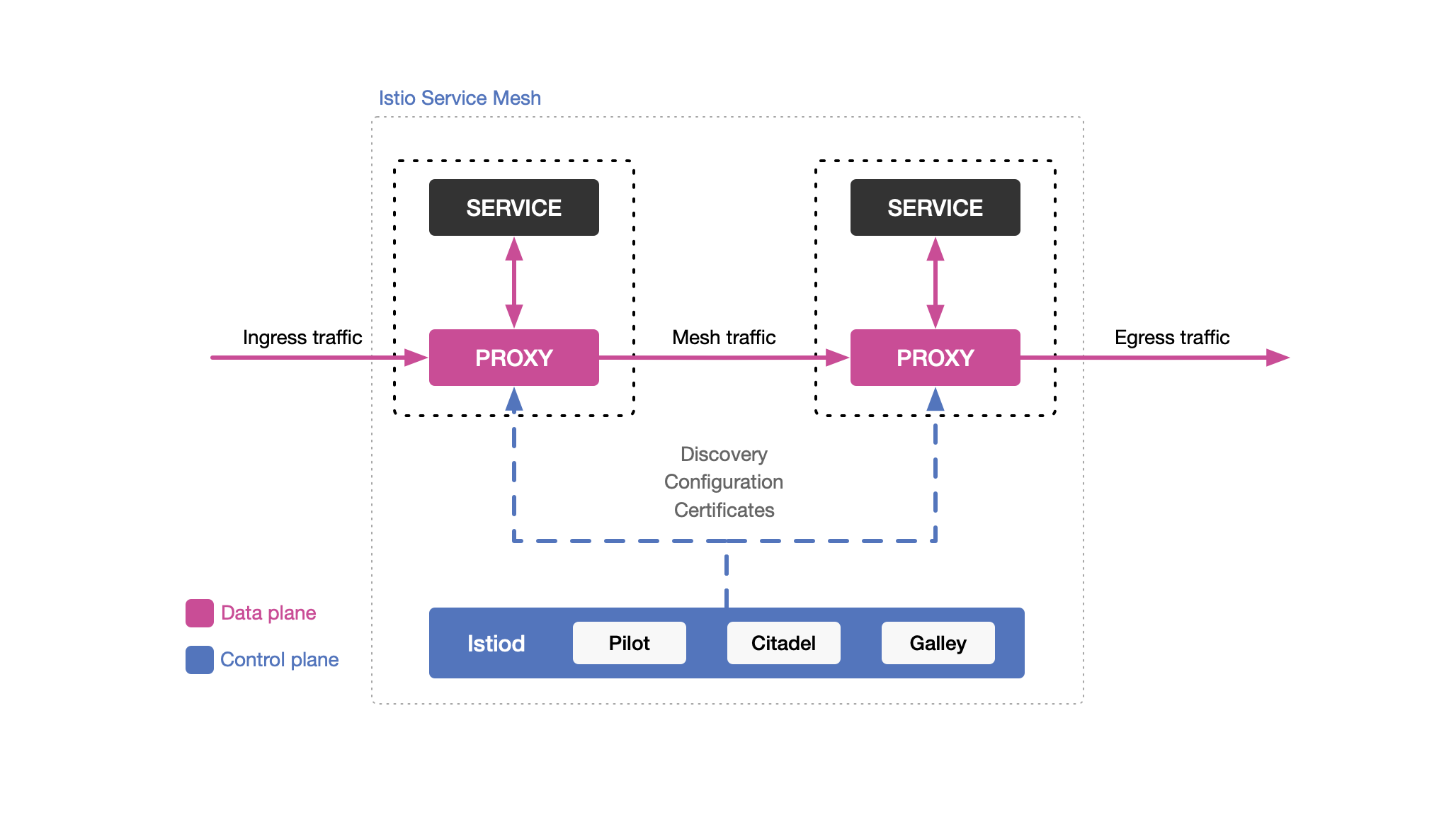

Istio consists of two main components:

| Component | Function | On Request Path | Technology |

|---|---|---|---|

| Data Plane | Handles actual traffic | ✅ Yes | Envoy proxies |

| Control Plane | Manages and configures data plane | ❌ No | Istiod |

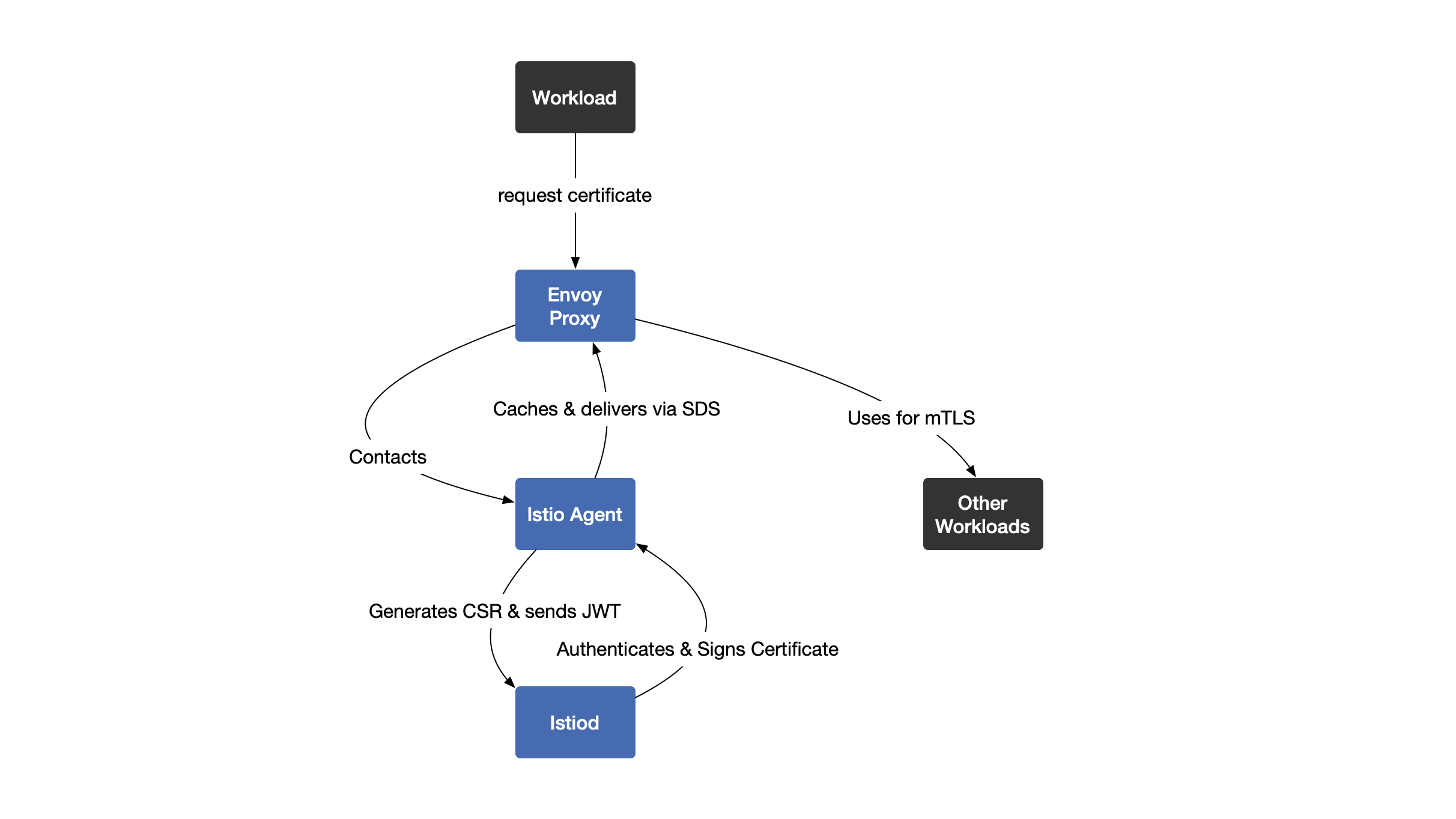

Data Plane

- Handles actual traffic between services

- On the request path (affects latency)

- Uses Envoy proxies

Control Plane

- Manages and configures data plane

- Not on request path (no latency impact)

- Uses Istiod (single binary)

Data Plane: Two Modes

Sidecar Mode

- An Envoy proxy container runs alongside the app container in each pod

- Intercepts all traffic in and out

- Injection is either automatic (via a mutating admission webhook) or manual (istioctl kube-inject)

- All proxies together form the data plane

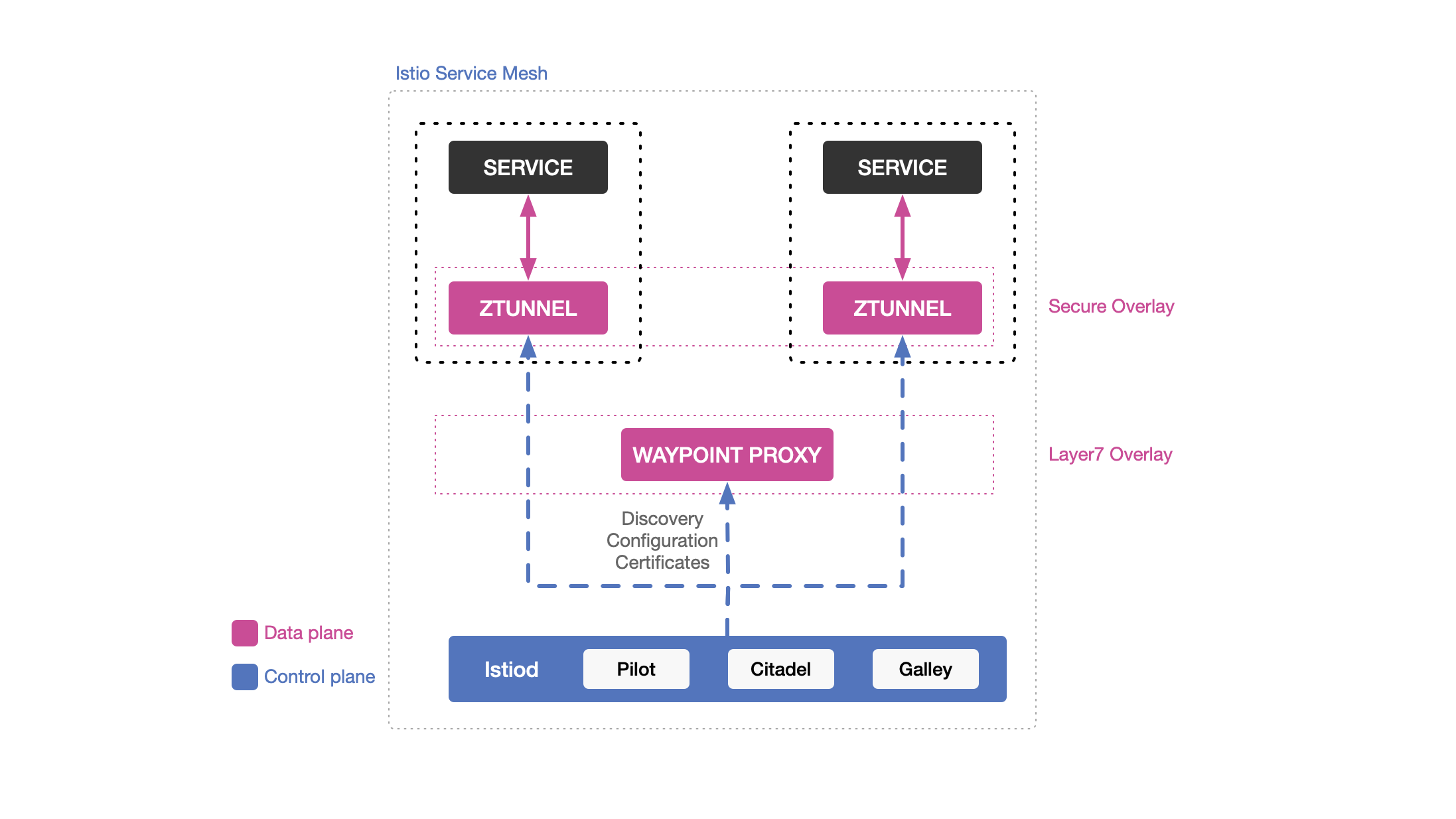

Ambient Mode (Production-Ready)

- No sidecar needed

- ztunnel: L4 proxy per node (basic traffic)

- waypoint proxy: L7 proxy when needed (advanced traffic)

- Uses fewer resources

- Generally available (GA) since Istio 1.24

| Feature | Sidecar Mode | Ambient Mode |

|---|---|---|

| Proxy Location | Per pod | Per node (ztunnel) |

| Resource Usage | Higher | Lower |

| L4 Traffic | Sidecar | ztunnel |

| L7 Traffic | Sidecar | waypoint proxy |

| Injection Required | ✅ Yes | ❌ No |

Key Components

Envoy (Data Plane)

High-performance proxy written in C++

Capabilities:

- Load balancing

- Circuit breaking

- Fault injection

- Traffic routing

- Observability metrics

WebAssembly Support: Extend functionality with custom Wasm plugins

Deployment:

- Sidecar mode: Runs as sidecar container

- Ambient mode: Runs as waypoint proxy

Istiod (Control Plane)

Istiod performs four main functions:

1. Service Discovery

- Finds all services (Kubernetes or VMs)

- Converts them into a common internal service model

- Maintains service registry

2. Configuration Management

- Reads your Istio resources (VirtualService, DestinationRule, and so on)

- Translates them into Envoy configuration

- Pushes the configuration to every proxy over the xDS APIs

3. Certificate Management

- Acts as certificate authority (CA)

- Generates and rotates certificates automatically

- Enables mTLS between proxies

4. Security

- Service-to-service authentication

- End-user authentication

- Authorization policies (who can access what)
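Istiod's CA issues each workload a certificate whose identity is a SPIFFE URI of the form spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>, and authorization policies match on that identity (the principals field omits the spiffe:// prefix). The Python sketch below parses such an ID and applies a toy allow-list; the names are hypothetical and the check is a simplification, not Istio's AuthorizationPolicy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadIdentity:
    trust_domain: str
    namespace: str
    service_account: str

    @property
    def principal(self) -> str:
        """Form used in AuthorizationPolicy `principals` (no spiffe:// prefix)."""
        return f"{self.trust_domain}/ns/{self.namespace}/sa/{self.service_account}"


def parse_spiffe_id(uri: str) -> WorkloadIdentity:
    """Parse spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a SPIFFE ID: {uri}")
    parts = uri[len(prefix):].split("/")
    if len(parts) != 5 or parts[1] != "ns" or parts[3] != "sa":
        raise ValueError(f"unexpected Istio SPIFFE layout: {uri}")
    return WorkloadIdentity(parts[0], parts[2], parts[4])


def is_allowed(peer_uri: str, allowed_principals: set[str]) -> bool:
    """Toy ALLOW rule: permit the call only if the peer's principal is listed."""
    return parse_spiffe_id(peer_uri).principal in allowed_principals
```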

How Istio Works

1. Write YAML configuration

↓

2. Istiod reads configuration

↓

3. Converts to Envoy/ztunnel config

↓

4. Distributes to all proxies

↓

5. Proxies handle traffic based on config

Istio = Control plane (Istiod) + Data plane (Envoy proxies)

- Sidecar mode: Proxy per pod

- Ambient mode: Shared proxies (lower overhead)

Installing Istio - Components & Profiles

Gateway Components

Ingress Gateway

- Handles traffic coming INTO the mesh

- Envoy proxy for inbound traffic

- Supports HTTP/TCP routing

- Works with Kubernetes Gateway API

- Included in the default profile

Egress Gateway

- Handles traffic going OUT of the mesh

- Optional: not part of the default profile (the demo profile does include it)

- Must be deployed explicitly if needed

- Useful for controlling outbound traffic

Configuration Profiles

Pre-configured setups for different use cases:

1. default (Production)

- Use for: Production environments

- Installs: istiod + Ingress Gateway

- Best for: Real deployments

2. minimal

- Use for: Custom setups

- Installs: Only istiod (no gateways)

- Best for: When you manage gateways separately

3. demo

- Use for: Learning and testing

- Installs: istiod + Ingress + Egress Gateway

Includes extra tracing/telemetry. Do not use for performance tests or production.

4. empty

- Use for: Full customization

- Installs: Nothing

- Best for: When you want total control

5. preview

- Use for: Testing new features

- Installs: Default components plus experimental features

- Best for: Early adopters

6. remote

- Use for: Multi-cluster setup

- Installs: Remote cluster components

- Best for: Connecting to central control plane

7. ambient

- Use for: Ambient mesh deployments

- Installs: istiod + ztunnel + CNI

Waypoint proxies must be deployed separately to enable L7 features.

Profile Comparison Table

| Profile | istiod | Ingress | Egress | ztunnel | Use Case |

|---|---|---|---|---|---|

| default | ✅ | ✅ | ❌ | ❌ | Production |

| minimal | ✅ | ❌ | ❌ | ❌ | Custom setup |

| demo | ✅ | ✅ | ✅ | ❌ | Learning |

| empty | ❌ | ❌ | ❌ | ❌ | Full custom |

| preview | ✅ | ✅ | ❌ | ❌ | Experimental |

| remote | ❌ | ❌ | ❌ | ❌ | Multi-cluster |

| ambient | ✅ | ❌ | ❌ | ✅ | Ambient mode |

- Production: Use the default profile

- Learning: Use the demo profile

- Ambient mode: Use the ambient profile

- Components can be installed together or separately based on needs

Installing Istio with Helm

What is Helm?

Helm is a package manager for Kubernetes that makes installing applications easier and repeatable.

Why Use Helm for Istio?

- Customization: Control exactly what you install

- Repeatability: Same setup every time

- Production Ready: Easy to upgrade later

- Version Control: Track changes to your configuration

Installation Steps (Sidecar Mode)

1. Add Istio Helm Repository

helm repo add istio https://istio-release.storage.googleapis.com/charts

helm repo update

2. Create Namespace

kubectl create namespace istio-system

3. Install Base Chart

helm install istio-base istio/base -n istio-system

This installs Istio's CRDs (Custom Resource Definitions), which register Istio's resource types with Kubernetes.

4. Install Istiod (Control Plane)

helm install istiod istio/istiod -n istio-system --set profile=demo

Profile Options:

- default: For production

- demo: For learning/testing (includes extra monitoring)

5. Enable Auto-Injection

kubectl label namespace default istio-injection=enabled

All new pods in the default namespace will automatically get a sidecar injected. Pods that already exist must be restarted to pick it up.

6. Install Ingress Gateway (Optional)

helm install istio-ingressgateway istio/gateway -n istio-system

Only needed if you want to expose services outside the cluster.

7. Verify Installation

kubectl get pods -n istio-system

Check that istiod and istio-ingressgateway (if installed) are Running.

Uninstall Istio

helm uninstall istio-ingressgateway -n istio-system

helm uninstall istiod -n istio-system

helm uninstall istio-base -n istio-system

kubectl delete namespace istio-system

Installing Istio with istioctl

What is istioctl?

istioctl is the command-line tool to install and manage Istio. This is the community-recommended installation method.

Two Installation Modes

| Mode | Description | Proxy Location |

|---|---|---|

| Sidecar | Each pod gets Envoy proxy injected | Per pod |

| Ambient | No sidecars, uses node-level proxies | Per node |

Installing Sidecar Mode

Installation Steps

# 1. Install Istio (demo profile for learning)

istioctl install --set profile=demo --skip-confirmation

# 2. Enable auto-injection

kubectl label namespace default istio-injection=enabled

# 3. Verify installation

kubectl get pods -n istio-system

You should see istiod and istio-ingressgateway Running.

Use default profile for production, not demo.

Installing Ambient Mode

What's Different?

- No sidecar per pod

- ztunnel: L4 proxy (one per node)

- waypoint proxy: L7 proxy (optional, when needed)

Platform Prerequisites

| Platform | Requirements | Notes |

|---|---|---|

| GKE | ResourceQuota for istio-system | Required |

| AKS | Network policies enabled | Works out of box |

| EKS | Calico CNI recommended | Works |

| Minikube/Kind | Check CNI compatibility | May need configuration |

Installation Steps

# 1. Install Gateway API CRDs

kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \
{ kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.2.1/standard-install.yaml; }

# 2. Install Istio (ambient profile)

istioctl install --set profile=ambient --skip-confirmation

# 3. Enable ambient mode for namespace

kubectl label namespace default istio.io/dataplane-mode=ambient

# 4. Deploy waypoint proxy (optional, for L7 features)

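istioctl waypoint apply -n default --enroll-namespace

# Or create a named waypoint and attach a single service to it

istioctl waypoint apply -n default --name <waypoint-name>

kubectl label service <service-name> -n default istio.io/use-waypoint=<waypoint-name>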

# 5. Verify waypoint

kubectl get gateway -n default    # waypoints are Gateway API resources (or: istioctl waypoint list -n default)

# 6. Verify installation

kubectl get pods -n istio-system

You should see istiod, istio-cni-node, and ztunnel pods Running.

Canary Upgrades (Production)

What is Canary Upgrade?

Install a new Istio version alongside the old one and migrate workloads gradually.

Using Revisions

# 1. Install new version with revision tag

istioctl install --set revision=1-24-3 --set profile=default --skip-confirmation

# 2. Switch the namespace to the new revision (remove istio-injection, which takes precedence over istio.io/rev)

kubectl label namespace default istio-injection- istio.io/rev=1-24-3 --overwrite

# 3. Restart pods to pick up new version

kubectl rollout restart deployment -n default

Using Revision Tags (Recommended)

# 1. Create tag for revision

istioctl tag set prod-stable --revision=1-24-3

# 2. Point the namespace at the tag (remove istio-injection if it is still set)

kubectl label namespace default istio-injection- istio.io/rev=prod-stable --overwrite

- Test new version in specific namespaces

- Easy rollback if problems occur

- Gradual migration path

- Better version management

Remove Old Version

istioctl uninstall --revision=<old-revision>

Sidecar vs Ambient Mode Comparison

| Feature | Sidecar Mode | Ambient Mode |

|---|---|---|

| Proxy per pod | ✅ Yes | ❌ No |

| Resource usage | Higher | Lower |

| L4 traffic | Sidecar | ztunnel |

| L7 traffic | Sidecar | waypoint proxy |

| Injection needed | ✅ Yes | ❌ No |

| Label | istio-injection=enabled | istio.io/dataplane-mode=ambient |

- istioctl = Main tool to install Istio

- Sidecar = Traditional approach (proxy per pod)

- Ambient = Modern approach (shared proxies, lower overhead)

- Revisions = Safe way to upgrade in production

Lab 1: Installing Istio Sidecar Mode

Prerequisites

Kubernetes Cluster Options

| Option | Type | Recommended For |

|---|---|---|

| Minikube | Local | Learning, testing |

| Docker Desktop | Local | Mac/Windows users |

| kind | Local | CI/CD pipelines |

| MicroK8s | Local | Ubuntu users |

| Cloud Provider | Remote | Production-like testing |

Minimum Requirements

- RAM: 8GB

- CPUs: 4

- Kubernetes: v1.28.0 or higher

Required Tools

- kubectl (Kubernetes CLI)

- istioctl (Istio CLI)

Minikube Setup

minikube start --driver=docker --cpus=4 --memory=8192 --disk-size=40g

minikube addons enable ingress

minikube status

Installation Steps

1. Download Istio

curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.24.3 sh -

cd istio-1.24.3

export PATH=$PWD/bin:$PATH

# Optional: install istioctl system-wide instead (may require sudo)

sudo mv bin/istioctl /usr/local/bin/

2. Verify istioctl

istioctl version

Should show: 1.24.3

3. Install Istio

istioctl install --set profile=demo --skip-confirmation

Profile Options:

- demo: For learning (includes ingress + egress gateway)

- default: For production

4. Check Installation

kubectl get pods -n istio-system

You should see:

- istiod - Running

- istio-ingressgateway - Running

- istio-egressgateway - Running

5. Enable Sidecar Auto-Injection

kubectl label namespace default istio-injection=enabled

All new pods in the default namespace will automatically get a sidecar injected. Pods that already exist must be restarted to pick it up.

6. Verify Label

kubectl get namespace -L istio-injection

Testing with Bookinfo App

Deploy Sample App

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/bookinfo/platform/kube/bookinfo.yaml

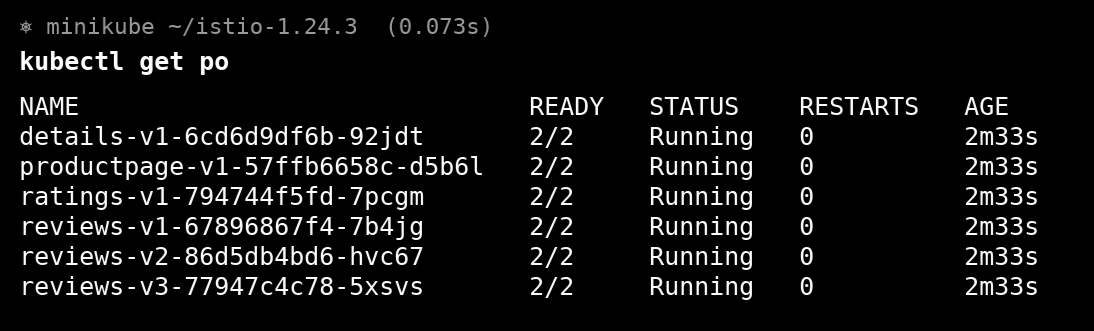

Check Pods

kubectl get pods

Each pod should show 2/2 READY (app + sidecar)

Example Output:

NAME READY STATUS

productpage-v1-xxx 2/2 Running

reviews-v1-xxx 2/2 Running

Setup Ingress Gateway

kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

Access Application (Minikube)

minikube tunnel

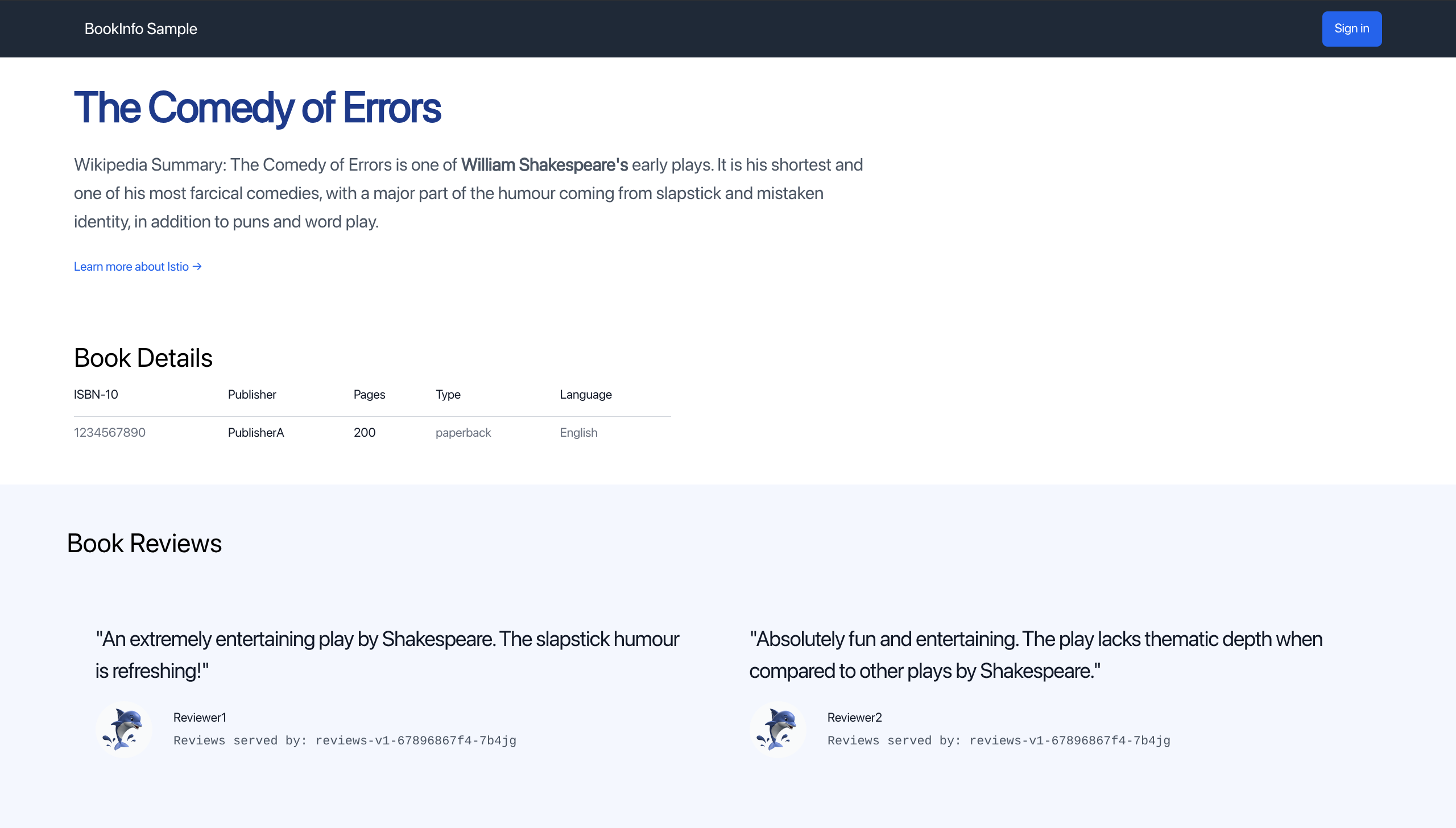

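Keep minikube tunnel running in a separate terminal, then open a browser at http://<EXTERNAL-IP>/productpage, using the EXTERNAL-IP shown by kubectl get svc istio-ingressgateway -n istio-system.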

What You'll See

- Bookinfo application page

- Refresh multiple times

- Reviews section changes (red stars, black stars, no stars)

- With no routing rules applied yet, requests are load-balanced across the three versions of the reviews service (v1: no stars, v2: black stars, v3: red stars)

Uninstall

istioctl uninstall --purge

kubectl delete namespace istio-system

Sidecar mode = Each pod gets 2 containers (app + Envoy proxy). The 2/2 READY count proves sidecar is injected.
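To check the READY count across many pods at once, a small parser over the kubectl get pods text output is enough. The Python sketch below is a hypothetical helper (kubectl get pods -o json would be sturdier for scripts); pass expected_containers=1 for ambient mode in Lab 2.

```python
import subprocess


def check_ready(kubectl_output: str, expected_containers: int = 2) -> dict[str, bool]:
    """Return {pod_name: True/False} depending on whether READY equals N/N."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    wanted = f"{expected_containers}/{expected_containers}"
    status = {}
    for line in lines[1:]:  # first line is the NAME READY STATUS header
        name, ready = line.split()[:2]
        status[name] = ready == wanted
    return status


if __name__ == "__main__":
    out = subprocess.run(["kubectl", "get", "pods"],
                         capture_output=True, text=True, check=True).stdout
    for pod, ok in check_ready(out).items():
        print(f"{pod}: {'READY count matches' if ok else 'READY count mismatch'}")
```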

Lab 2: Installing Istio Ambient Mode

What is Ambient Mode?

Ambient mode is a sidecar-less data plane mode for Istio, resulting in lower resource usage.

Prerequisites

Same as Lab 1:

- Kubernetes cluster (Minikube, Docker Desktop, Kind, MicroK8s, or cloud)

- 8GB RAM, 4 CPUs

- Kubernetes v1.28.0 or higher

- kubectl and istioctl installed

Installation Steps

1. Install Istio (Ambient Profile)

istioctl install --set profile=ambient --skip-confirmation

2. Check Installation

kubectl get pods -n istio-system

You should see:

- istiod - Running

- istio-cni-node-xxx - Running (one per node)

- ztunnel-xxx - Running (one per node)

No ingress/egress gateway in ambient profile by default.

3. Enable Ambient Mode for Namespace

kubectl label namespace default istio.io/dataplane-mode=ambient

4. Verify Label

kubectl get namespace -L istio.io/dataplane-mode

Testing with Bookinfo App

Deploy Sample App

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/bookinfo/platform/kube/bookinfo.yaml

Check Pods

kubectl get pods

Each pod should show 1/1 READY (just app, no sidecar!)

Example Output:

NAME READY STATUS

productpage-v1-xxx 1/1 Running

reviews-v1-xxx 1/1 Running

1/1 not 2/2 - no sidecar injected!

Deploy Waypoint Proxy (Optional - For L7 Features)

Install Gateway API CRDs

kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \
{ kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.2.1/standard-install.yaml; }

This command checks if Gateway API CRDs exist; if not, installs them.

Deploy Waypoint for Namespace

istioctl waypoint apply -n default --enroll-namespace

Check Waypoint

kubectl get pod

You should see a waypoint proxy pod Running.

Configure Ingress Access

Deploy Gateway

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/bookinfo/gateway-api/bookinfo-gateway.yaml

Access Application (Minikube)

minikube tunnel

Then open browser: http://localhost/productpage

What You'll See

- Bookinfo application page

- Refresh multiple times

- Reviews section changes (different versions)

- Traffic routing works without sidecars!

Uninstall

istioctl uninstall --purge

kubectl delete namespace istio-system

Sidecar vs Ambient Comparison

| Feature | Sidecar (Lab 1) | Ambient (Lab 2) |

|---|---|---|

| Pods READY count | 2/2 | 1/1 |

| Proxy location | Per pod | Per node (ztunnel) |

| Resource usage | Higher | Lower |

| L4 traffic | Sidecar | ztunnel |

| L7 traffic | Sidecar | waypoint proxy |

| Label | istio-injection=enabled | istio.io/dataplane-mode=ambient |

| Profile | demo | ambient |

Ambient mode = No sidecars. Pods show 1/1 READY. Uses ztunnel (L4) + optional waypoint (L7). Less overhead; L7 features require a waypoint.

Observability and Prometheus

What is Observability?

Observability is the ability to see what's happening inside your service mesh - like having eyes on your traffic.

How Istio Collects Data

| Mode | Collection Method | Layer |

|---|---|---|

| Sidecar | Envoy proxy intercepts traffic | L4 + L7 |

| Ambient | ztunnel (L4) + waypoint proxy (L7) | L4 and L7 |

Three Types of Telemetry

| Type | Description | Example |

|---|---|---|

| Metrics | Numbers and statistics | Requests, errors, latency |

| Distributed Traces | Request path across services | Full journey tracking |

| Access Logs | Access records | Who accessed what and when |

Metrics: The Four Golden Signals

1. Latency

How long requests take to complete

- Success latency (HTTP 200)

- Failure latency (HTTP 500)

2. Traffic

How many requests hit your system

- Requests per second

- Concurrent sessions

3. Errors

How many requests fail

- HTTP 500s count

- Error rate percentage

4. Saturation

How full your resources are

- CPU usage

- Memory usage

- Thread pool utilization

| Signal | Measures | Key Metrics |

|---|---|---|

| Latency | Response time | p50, p95, p99 |

| Traffic | Request volume | RPS, concurrent users |

| Errors | Failure rate | 4xx, 5xx counts |

| Saturation | Resource usage | CPU%, Memory% |
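To make the signals concrete, the Python sketch below computes latency percentiles (nearest-rank method), traffic (requests per second) and error rate from a list of request records for one time window. The record layout is hypothetical, and it counts only 5xx responses as errors, which is a choice (the table above also tracks 4xx). Saturation is left out because it comes from resource metrics (CPU, memory), not request records. In practice Prometheus estimates percentiles from Istio's istio_request_duration_milliseconds histogram rather than from raw samples.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def golden_signals(requests: list[Request], window_s: float) -> dict[str, float]:
    """Latency percentiles, traffic (RPS) and error rate for one time window."""
    if not requests:
        raise ValueError("no requests in window")
    latencies = [r.latency_ms for r in requests]
    errors = sum(1 for r in requests if r.status >= 500)  # 5xx only, by choice
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "p99_ms": percentile(latencies, 99),
        "rps": len(requests) / window_s,
        "error_rate": errors / len(requests),
    }
```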

Three Levels of Metrics

1. Proxy-Level Metrics (Most Detailed)

Collection:

- Sidecar Mode: Envoy proxy collects data

- Ambient Mode: ztunnel and waypoint collect data

Example metrics:

envoy_cluster_internal_upstream_rq{response_code_class="2xx"} 7163

envoy_cluster_upstream_rq_completed{cluster_name="xds-grpc"} 7164

Access: the /stats endpoint of the Envoy admin interface (port 15000 inside the pod)

2. Service-Level Metrics (Most Useful)

Tracks communication between services and covers all 4 golden signals.

Example:

istio_requests_total{

response_code="200",

source_workload="istio-ingressgateway",

destination_workload="web-frontend",

request_protocol="http"

} 9

Istio's proxies expose these metrics, and Prometheus scrapes them automatically.

3. Control Plane Metrics

Monitors Istio itself (not your applications).

Examples:

- Conflicting listeners count

- Clusters without instances

- Rejected configurations

Metrics Level Comparison

| Level | Scope | Detail | Use Case |

|---|---|---|---|

| Proxy | Individual proxy | Highest | Deep debugging |

| Service | Service-to-service | Medium | Daily monitoring |

| Control Plane | Istio components | Low | Istio health |

Prometheus

What is Prometheus?

Prometheus is an open-source monitoring tool that stores and queries metrics - a time series database for numbers.

Install Prometheus

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/addons/prometheus.yaml

Open Dashboard

istioctl dashboard prometheus

Then open browser: http://localhost:9090

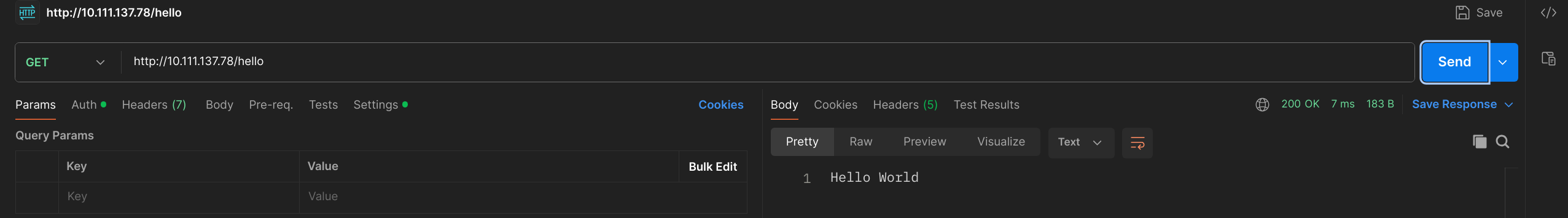

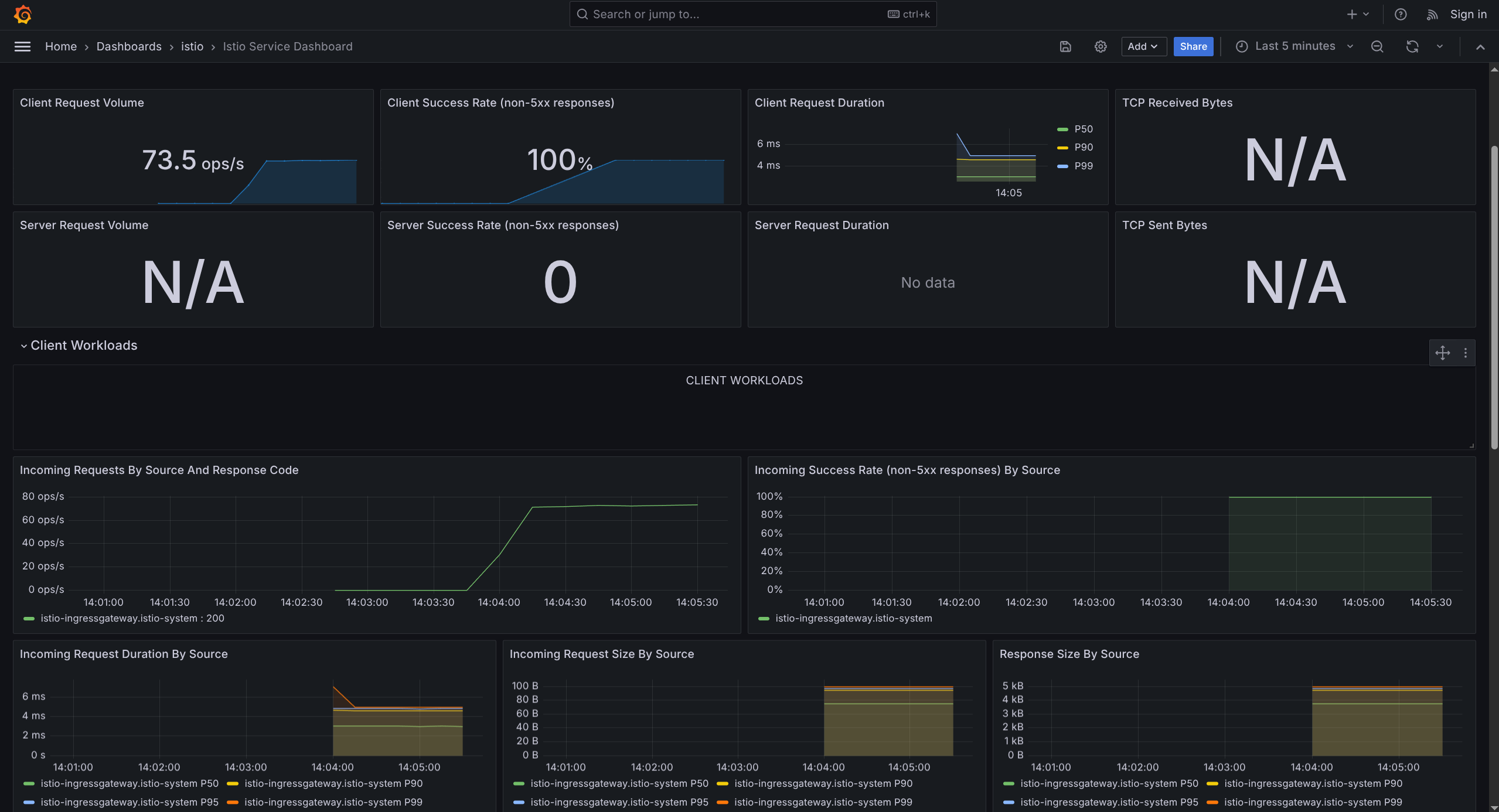

Testing Observability (Hands-on)

1. Deploy Sample Apps

# Deploy httpbin (receives requests)

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/httpbin/httpbin.yaml

# Deploy sleep (sends requests)

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/sleep/sleep.yaml

2. Check Pods

kubectl get pods -n default

| Mode | READY Count | Traffic Path |

|---|---|---|

| Sidecar | 2/2 | Through sidecar |

| Ambient | 1/1 | Through ztunnel |

3. Generate Traffic

# Single request

kubectl exec deploy/sleep -c sleep -- curl -sS http://httpbin:8000/get

# Multiple requests (20 times)

for i in {1..20}; do

kubectl exec deploy/sleep -c sleep -- curl -sS http://httpbin:8000/get

sleep 0.5

done

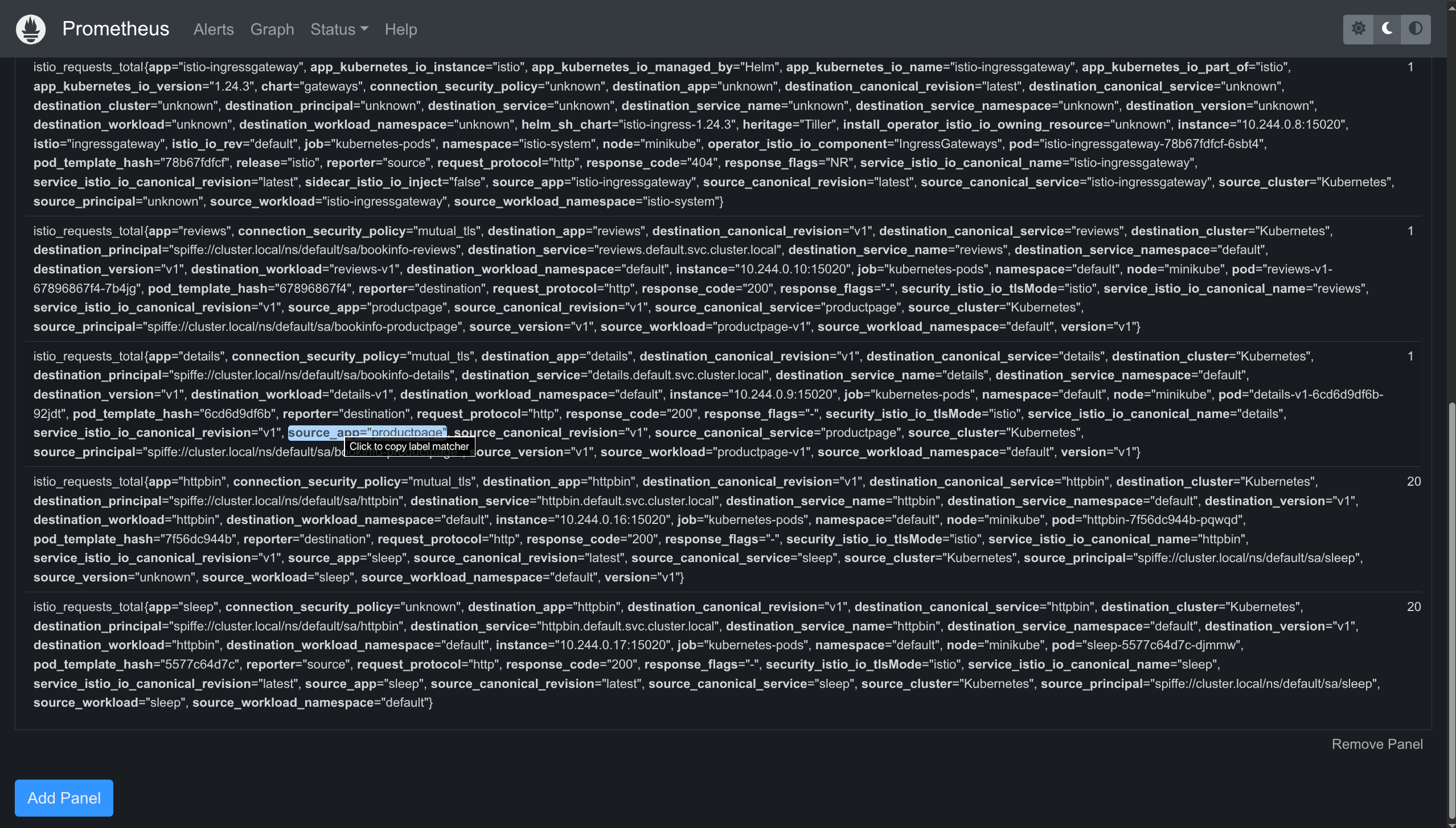

4. View Metrics in Prometheus

Open Prometheus dashboard and search for:

istio_requests_total

You'll see all requests from sleep to httpbin with details:

- Response codes

- Source/destination workloads

- Protocol used

- Connection security
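The same data is reachable without the UI through Prometheus' HTTP API (/api/v1/query). The Python sketch below assumes istioctl dashboard prometheus is forwarding to localhost:9090 and that the target is the httpbin sample deployed above; the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

PROMETHEUS = "http://localhost:9090"  # assumes `istioctl dashboard prometheus` is running


def build_query_url(promql: str, base: str = PROMETHEUS) -> str:
    """Build an instant-query URL for Prometheus' /api/v1/query endpoint."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(body: str) -> dict[str, float]:
    """Turn an instant-vector JSON response into {response_code: value}."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload}")
    return {
        series["metric"].get("response_code", ""): float(series["value"][1])
        for series in payload["data"]["result"]
    }


def request_rate_by_code(workload: str) -> dict[str, float]:
    """Per-second request rate to `workload` over the last minute, split by response code."""
    promql = (
        "sum by (response_code) "
        f'(rate(istio_requests_total{{destination_workload="{workload}"}}[1m]))'
    )
    with urllib.request.urlopen(build_query_url(promql), timeout=5) as resp:
        return parse_vector(resp.read().decode())


if __name__ == "__main__":
    print(request_rate_by_code("httpbin"))
```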

Observability = Seeing what's happening in your mesh

Three types: Metrics (numbers), Traces (request path), Logs (access records)

Four golden signals: Latency, Traffic, Errors, Saturation

Prometheus = Tool to store and view metrics

Works in both modes: Sidecar (via Envoy) and Ambient (via ztunnel/waypoint)

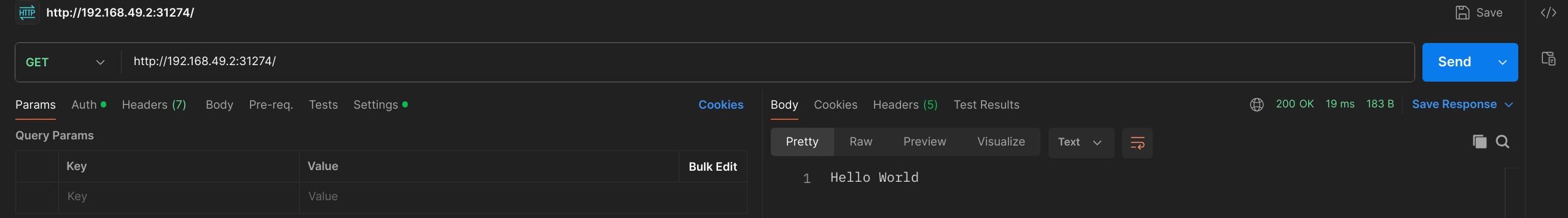

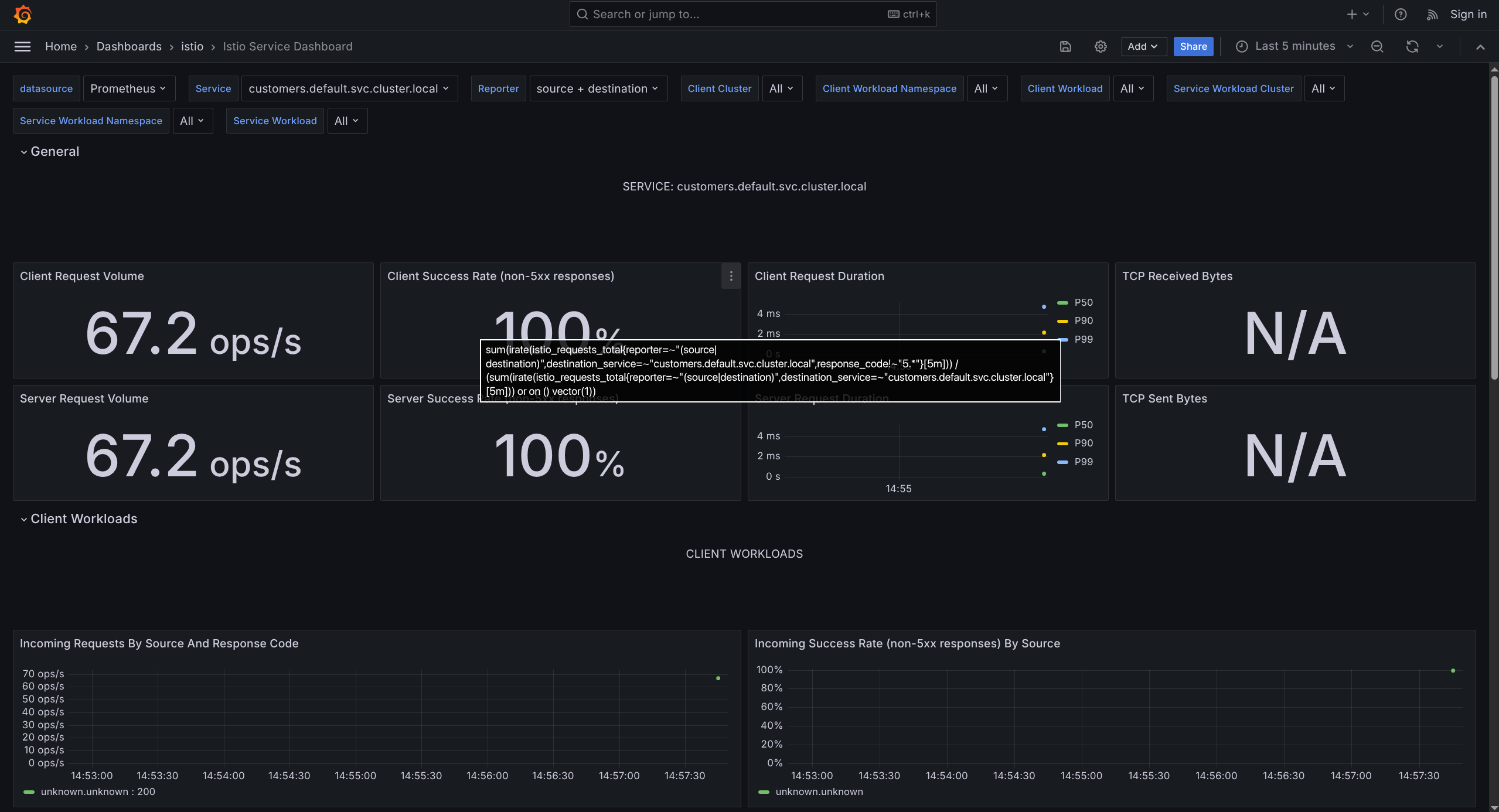

Grafana

What is Grafana?

Grafana is an open-source tool to visualize metrics. It takes data from Prometheus and displays it in graphs, tables, and charts.

Purpose: Monitor health of Istio and your applications in the mesh.

Prerequisites

Must install Prometheus first! Grafana uses Prometheus as its data source.

Install Grafana

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/addons/grafana.yaml

This is for learning only, NOT for production (not tuned for performance/security).

Open Grafana Dashboard

istioctl dashboard grafana

Then open browser: http://localhost:3000

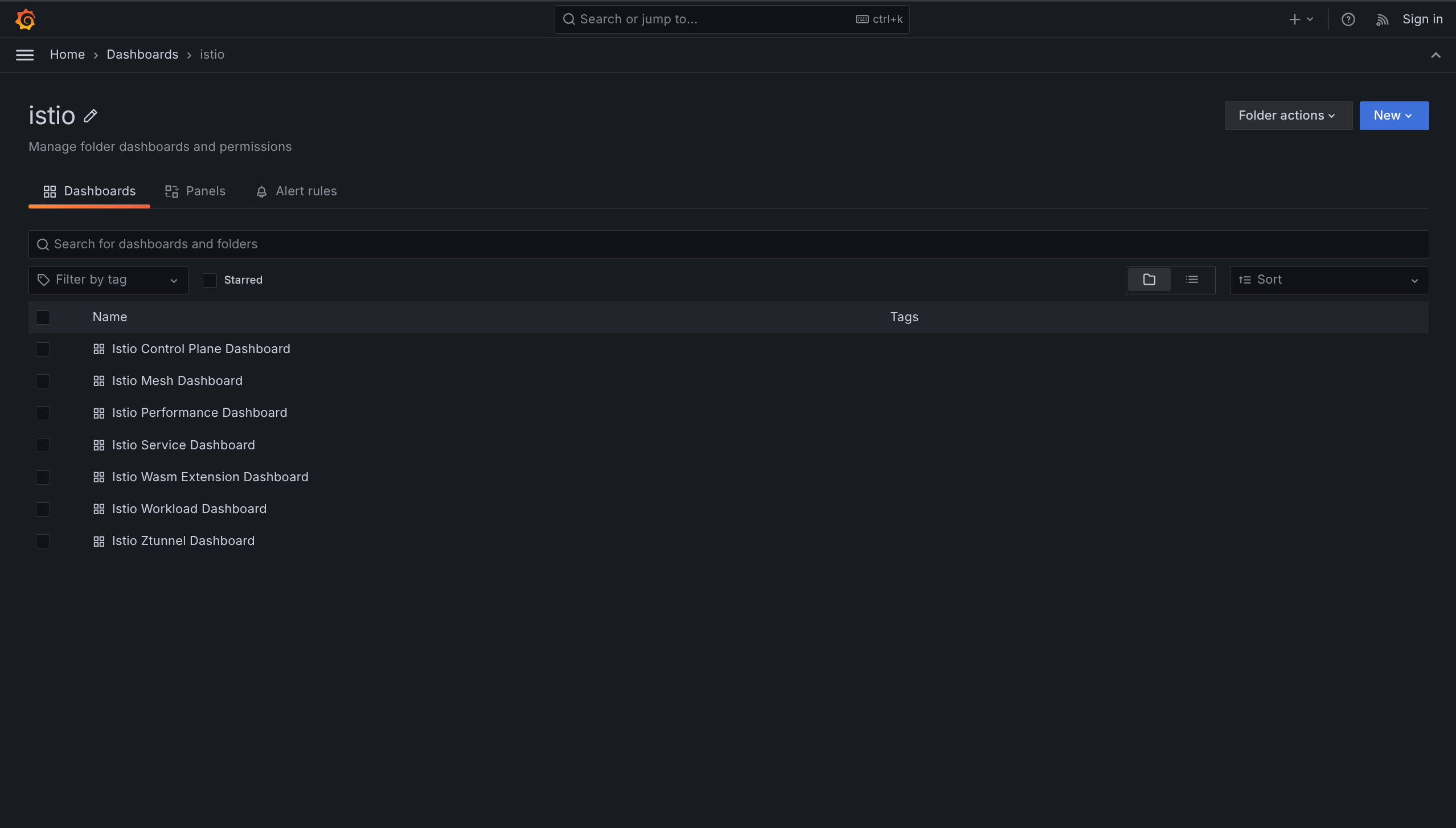

Navigate to istio folder to see all dashboards.

Pre-configured Dashboards (7 Total)

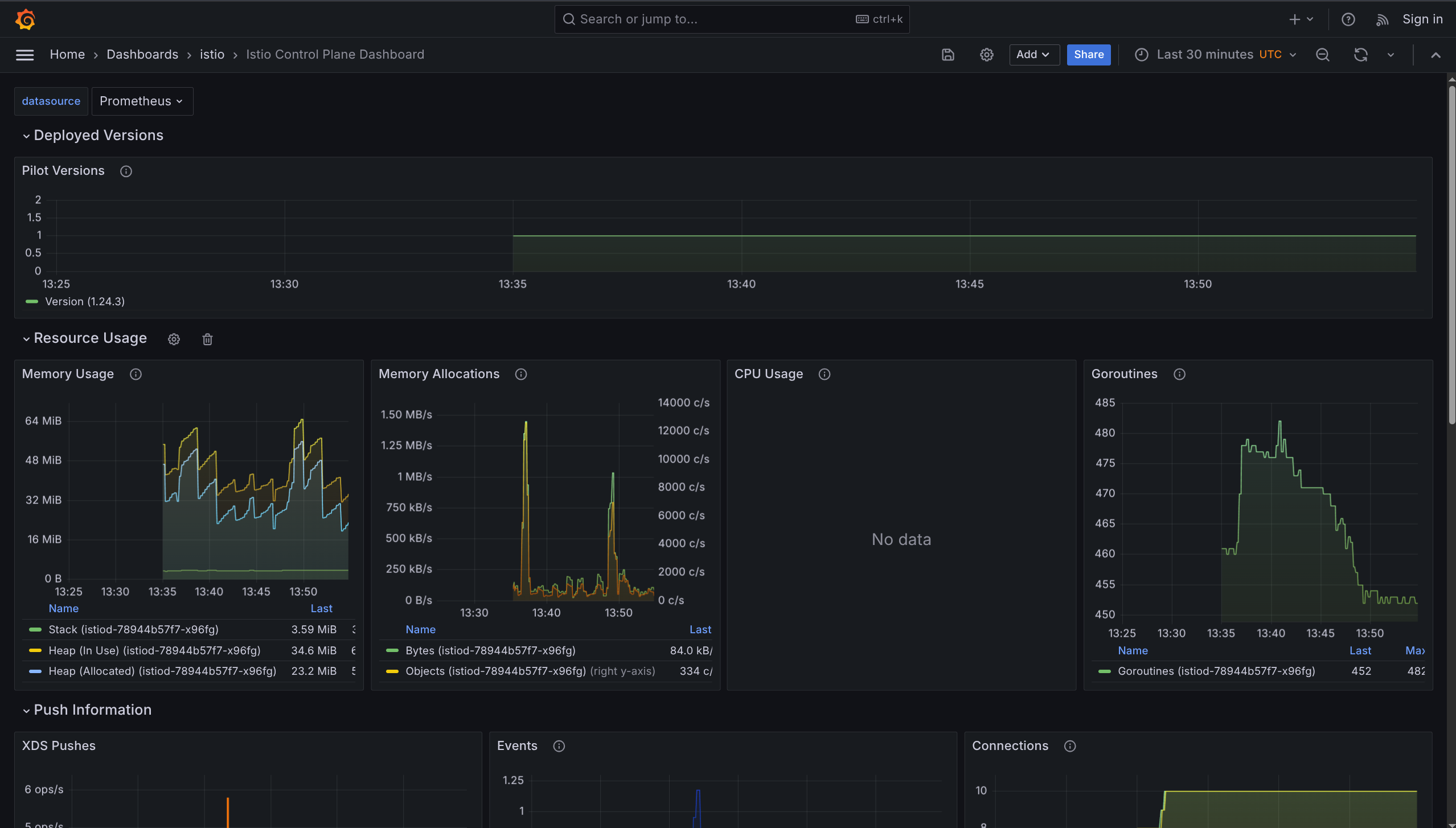

1. Istio Control Plane Dashboard

What it shows: Health of Istio control plane itself

Monitors:

- CPU, memory, disk usage

- Pilot metrics

- Envoy statistics

- Webhook status

Use when: Checking if Istio control plane is healthy

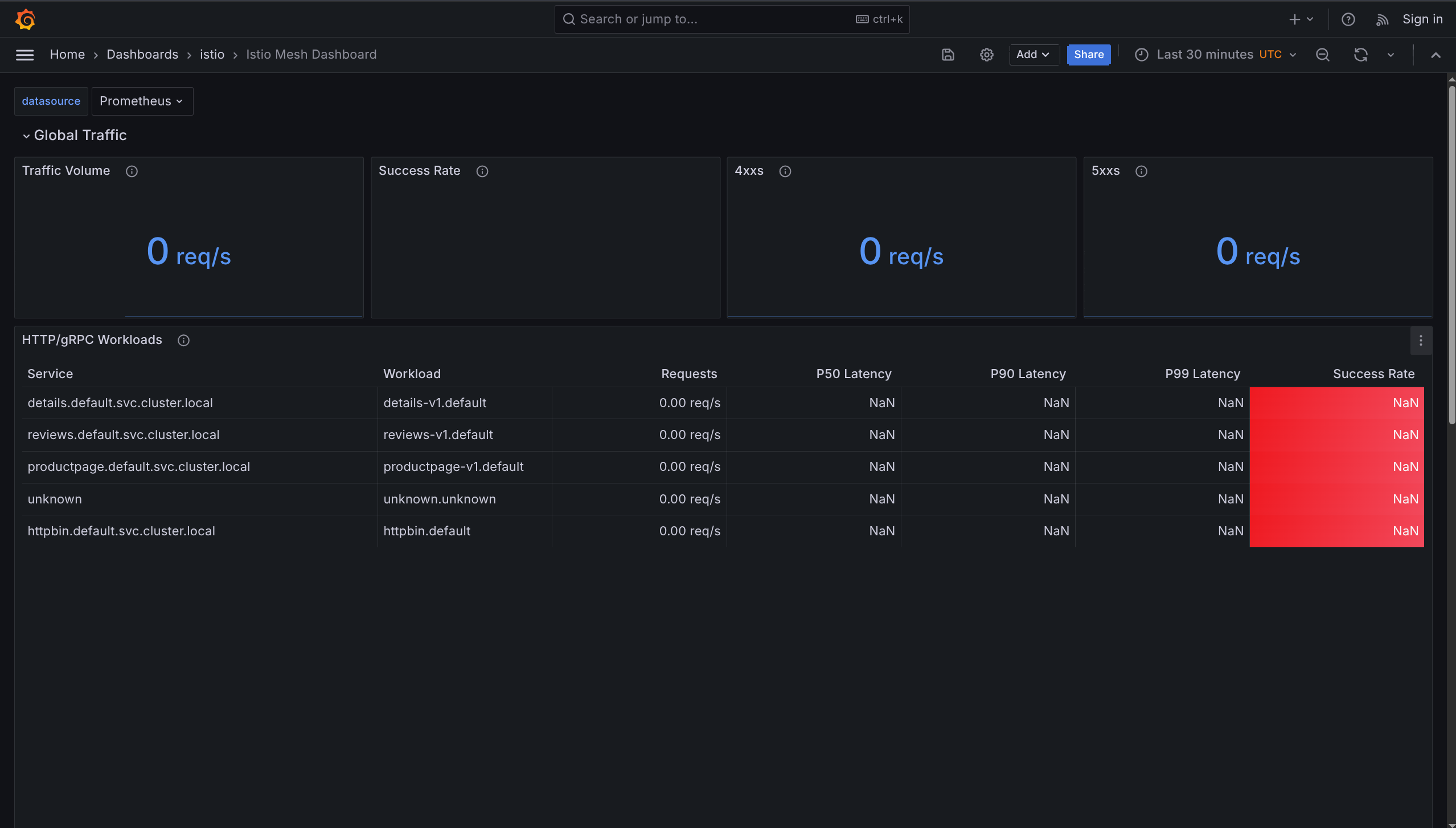

2. Istio Mesh Dashboard

What it shows: Overview of ALL services in mesh

Monitors:

- Global request volume

- Success rate

- HTTP 4xx errors (client errors)

- HTTP 5xx errors (server errors)

Use when: Getting big picture of entire mesh health

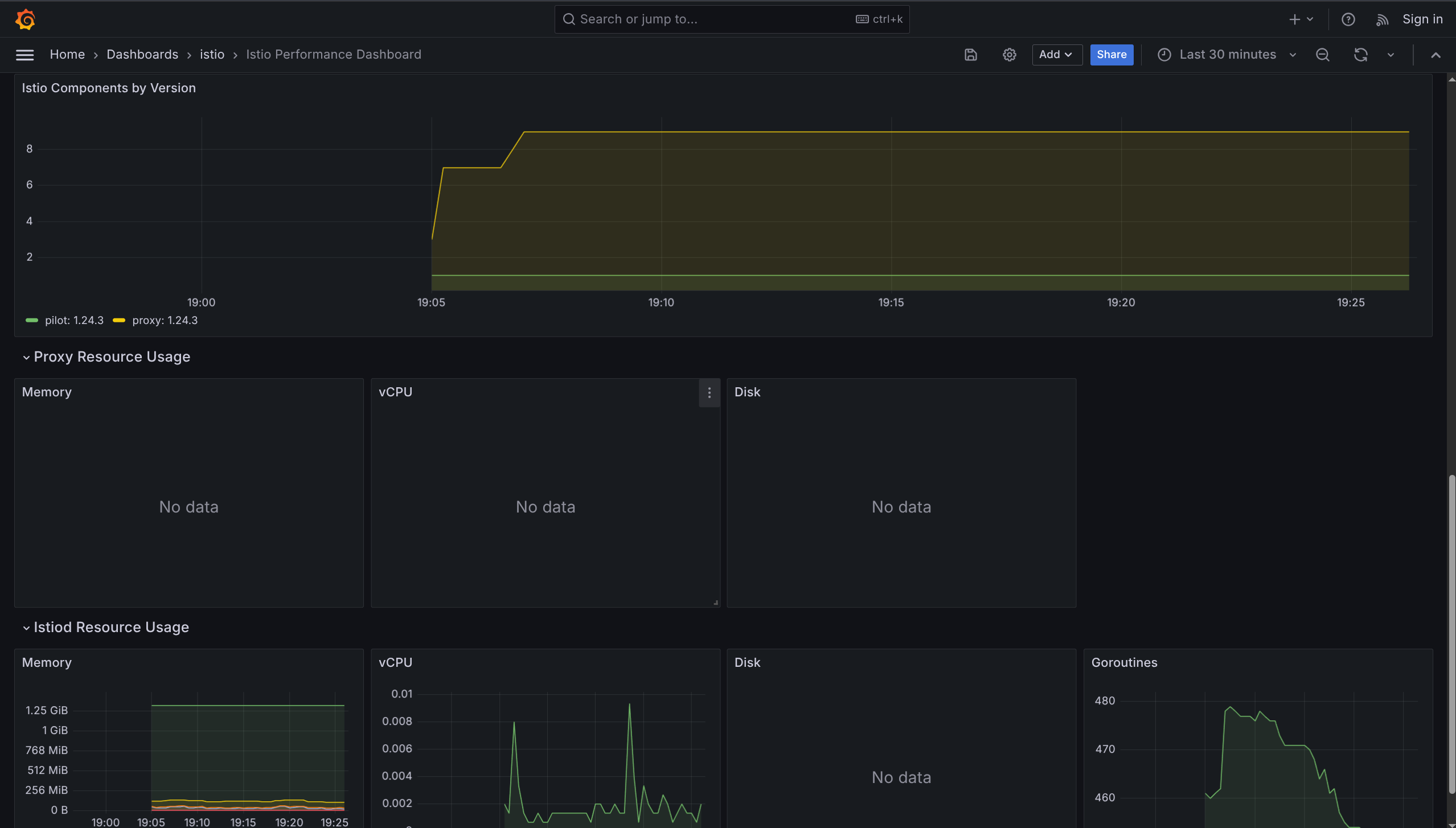

3. Istio Performance Dashboard

What it shows: How much resources Istio uses

Monitors:

- Resource utilization under load

- Overhead added by Istio

Use when: Checking if Istio is using too much CPU/memory

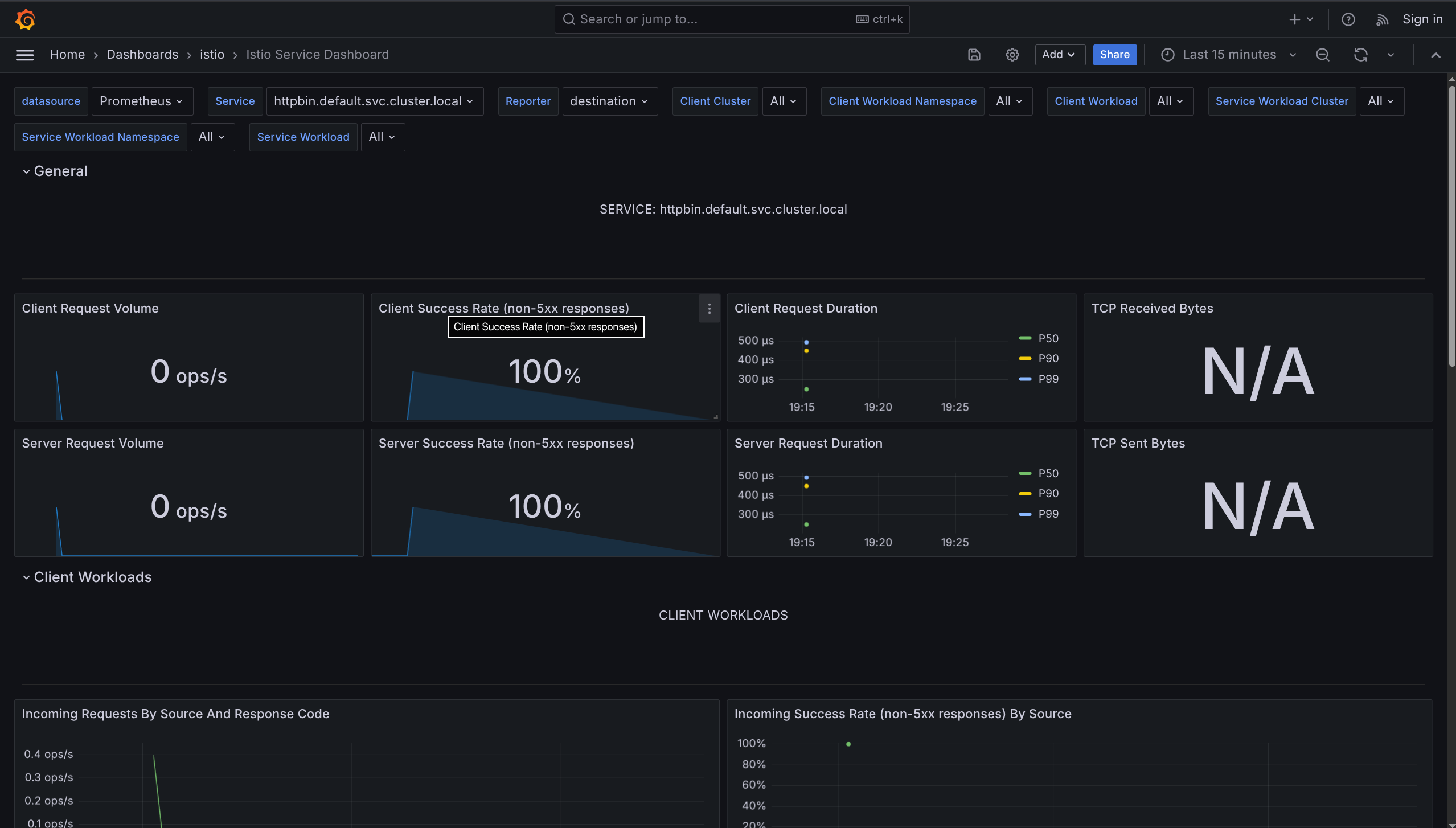

4. Istio Service Dashboard

What it shows: Details about ONE specific service

Monitors:

- Request volume

- Response codes (200, 404, 500, etc.)

- Request duration (latency)

- Traffic sources (who's calling this service)

Use when: Debugging specific service issues

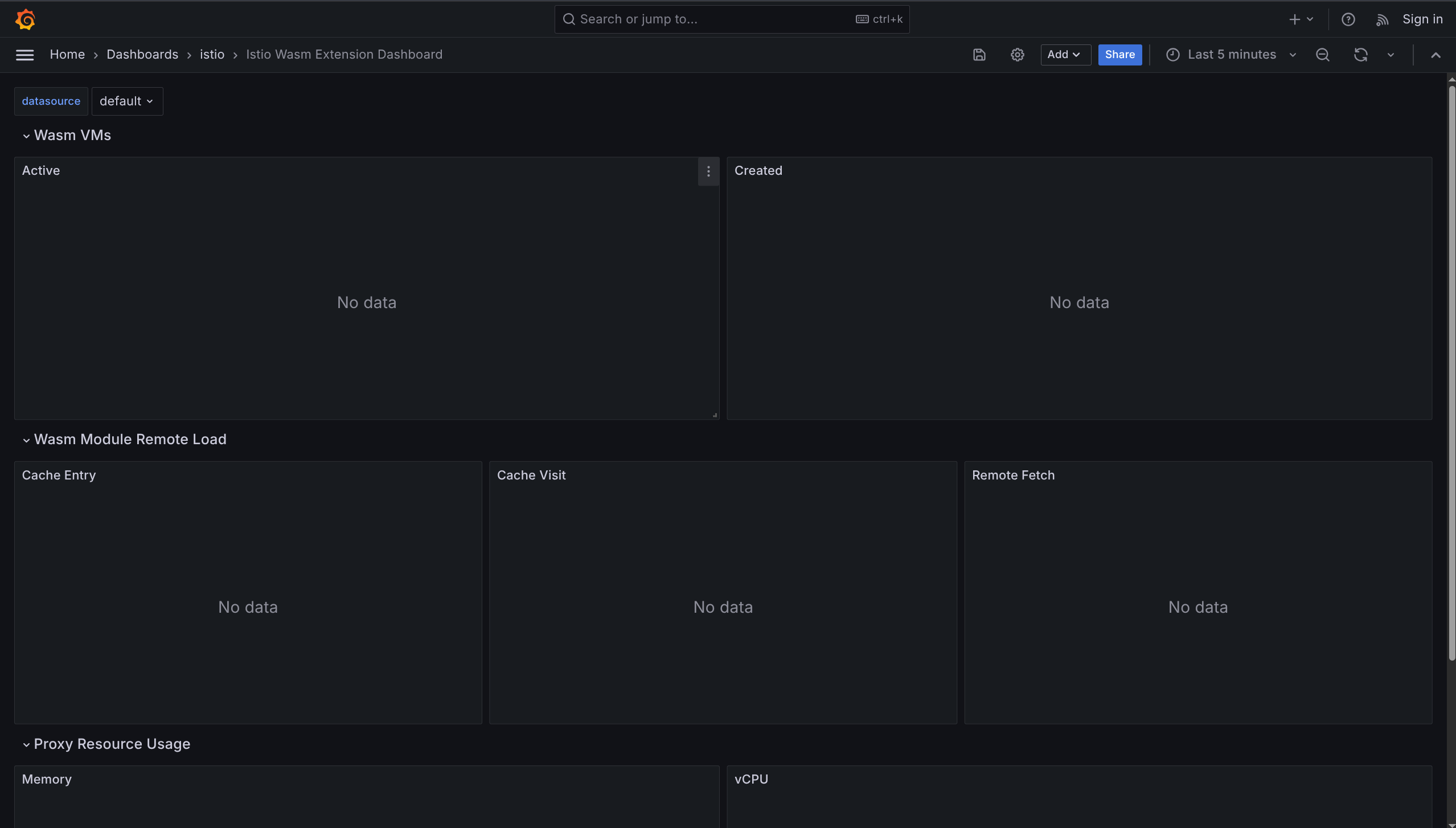

5. Istio Wasm Extension Dashboard

What it shows: WebAssembly extensions metrics

Monitors:

- Active Wasm VMs

- Created Wasm VMs

- Remote module fetching

- Proxy resource usage

Use when: Using Wasm plugins in Istio

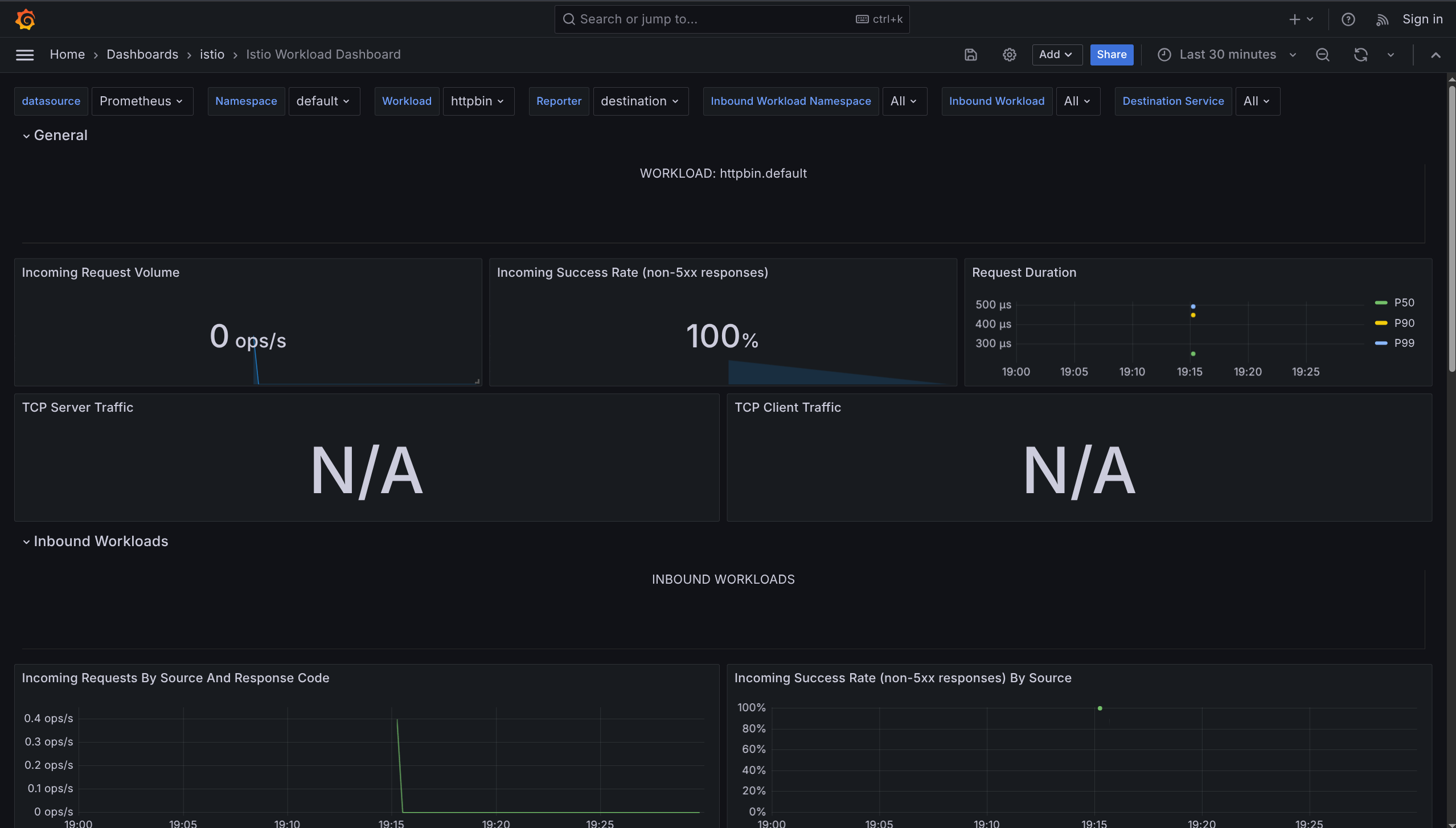

6. Istio Workload Dashboard

What it shows: Metrics for individual workloads (pods)

Monitors:

- Resource consumption per workload

- Traffic flow per workload

Use when: Checking specific pod performance

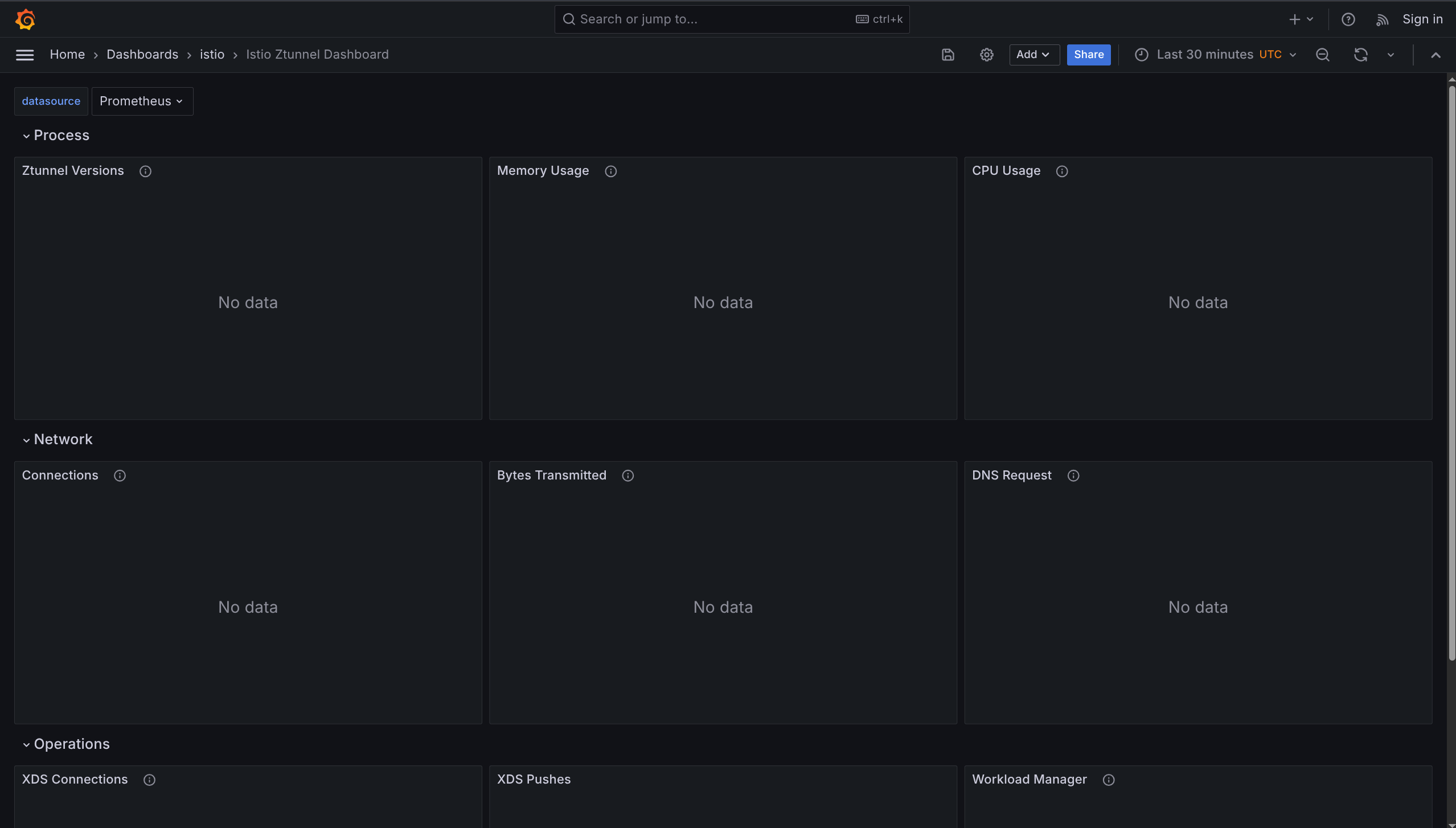

7. Istio Ztunnel Dashboard

What it shows: Ambient mode L4 proxy metrics

Monitors:

- ztunnel traffic interception

- L4 encryption metrics

Use when: Running Istio in ambient mode

Dashboard Comparison

| Dashboard | Focus | When to Use |

|---|---|---|

| Control Plane | Istio itself | Is Istio healthy? |

| Mesh | All services | Overall mesh health |

| Performance | Resource usage | Is Istio using too much? |

| Service | One service | Debug specific service |

| Wasm | Wasm plugins | Using Wasm extensions |

| Workload | Individual pods | Check pod performance |

| Ztunnel | Ambient mode | Using ambient mode |

Grafana = Pretty graphs for Prometheus data

Needs Prometheus = Must install Prometheus first

7 dashboards = Pre-configured for different monitoring needs

- Control Plane = Monitor Istio health

- Mesh = Monitor all services

- Service = Monitor one service

- Ztunnel = Monitor ambient mode

Zipkin - Distributed Tracing

What is Distributed Tracing?

Distributed tracing tracks a single request as it travels through multiple services - like following a package through different delivery stations.

Why needed: In microservices, one user request touches many services. Tracing shows the full journey.

How Tracing Works

Request Flow

1. Request enters mesh

↓

2. Envoy generates unique request ID

↓

3. ID stored in HTTP headers

↓

4. Each service forwards headers to next service

↓

5. All pieces connected into one trace

Collection by Mode

| Mode | Collection Method | Layer |

|---|---|---|

| Sidecar | Envoy proxies collect spans automatically | L4 + L7 |

| Ambient | ztunnel (L4) + waypoint proxies (L7) | L4 and L7 |

Key Concepts

Span

One piece of the trace representing work done by one service.

Contains:

- Name

- Start time

- End time

- Tags (metadata)

- Logs

Common Tags

| Tag | Description | Example |

|---|---|---|

| istio.mesh_id | Which mesh | default |

| istio.canonical_service | Service name | productpage |

| http.url | URL called | /api/v1/users |

| http.status_code | Response code | 200, 500 |

| upstream_cluster | Destination cluster | reviews.default |

Trace

Collection of all spans for one request showing the full journey.
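A trace is essentially a tree of spans linked by parent IDs. The Python sketch below models that structure with a hypothetical Span record and renders one trace as an indented tree; the Bookinfo service names and timings in the demo are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None for the root span
    service: str
    start_ms: float
    end_ms: float
    tags: dict[str, str] = field(default_factory=dict)

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def render_trace(spans: list[Span]) -> list[str]:
    """Render one trace as indented lines, children ordered by start time."""
    children: dict[Optional[str], list[Span]] = {}
    for s in spans:
        children.setdefault(s.parent_id, []).append(s)
    lines: list[str] = []

    def walk(parent: Optional[str], depth: int) -> None:
        for s in sorted(children.get(parent, []), key=lambda sp: sp.start_ms):
            lines.append(f"{'  ' * depth}{s.service} ({s.duration_ms:.0f} ms)")
            walk(s.span_id, depth + 1)

    walk(None, 0)
    return lines


if __name__ == "__main__":
    demo = [
        Span("t1", "a", None, "productpage", 0, 120),
        Span("t1", "b", "a", "details", 10, 30),
        Span("t1", "c", "a", "reviews", 35, 110),
        Span("t1", "d", "c", "ratings", 50, 80),
    ]
    print("\n".join(render_trace(demo)))
```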

Headers to Propagate

Your application MUST forward these headers to the next service!

x-request-id

x-b3-traceid

x-b3-spanid

x-b3-parentspanid

x-b3-sampled

x-b3-flags

b3

Why: The proxy cannot tell which outgoing request your application made on behalf of which incoming request. These headers link them together.

How: Copy headers from incoming request → Add to outgoing request
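The copy step can be as small as the Python helper below. It is a framework-neutral sketch: incoming stands for whatever header mapping your web framework exposes, and the returned dict is meant to be passed as the headers of the outgoing call.

```python
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "b3",
)


def propagation_headers(incoming: dict[str, str]) -> dict[str, str]:
    """Copy the tracing headers listed above from an incoming request (case-insensitive)."""
    lowered = {k.lower(): v for k, v in incoming.items()}
    return {h: lowered[h] for h in TRACE_HEADERS if h in lowered}
```

For example, with the requests library: requests.get(url, headers=propagation_headers(dict(request.headers))), where request is the framework's incoming request object (hypothetical here).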

Zipkin

What is Zipkin?

Zipkin is a tool to collect, store, and visualize distributed traces.

Purpose:

- See request flow

- Find slow services

- Debug issues

- Identify bottlenecks

Install Zipkin

1. Deploy Zipkin

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/addons/extras/zipkin.yaml

2. Configure Istio to Use Zipkin

cat <<EOF > ./tracing.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    enableTracing: true
    defaultConfig:
      tracing: {}
    extensionProviders:
    - name: zipkin
      zipkin:
        service: zipkin.istio-system.svc.cluster.local
        port: 9411
EOF

istioctl install -f ./tracing.yaml --skip-confirmation

3. Enable Tracing for Mesh

cat <<EOF | kubectl apply -f -
apiVersion: telemetry.istio.io/v1
kind: Telemetry
metadata:
  name: mesh-default
  namespace: istio-system
spec:
  tracing:
  - providers:
    - name: zipkin
    randomSamplingPercentage: 100.0
EOF

100.0 = Trace every request (for learning). In production, use lower % (like 1-10%).
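The cost of a sampling rate is easy to estimate, and the decision itself is conceptually a weighted coin flip made once per trace, whose result then travels with the request (for B3, in x-b3-sampled). The Python sketch below shows both; the 1000 RPS figure is an arbitrary example, not a measurement, and the function names are hypothetical.

```python
import random

SECONDS_PER_DAY = 86_400


def traces_per_day(requests_per_second: float, sampling_pct: float) -> float:
    """Expected number of traces stored per day for a steady request rate."""
    return requests_per_second * SECONDS_PER_DAY * sampling_pct / 100


def sample(sampling_pct: float, rng: random.Random) -> str:
    """Head-based decision, made once per trace: '1' = record, '0' = drop."""
    return "1" if rng.random() * 100 < sampling_pct else "0"


if __name__ == "__main__":
    for pct in (100.0, 10.0, 1.0):
        print(f"{pct:>5}% at 1000 RPS -> {traces_per_day(1000, pct):,.0f} traces/day")
```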

Testing with Bookinfo

1. Enable Sidecar Injection

kubectl label namespace default istio-injection=enabled

2. Deploy Bookinfo

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/bookinfo/platform/kube/bookinfo.yaml

3. Deploy Gateway

kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/bookinfo/networking/bookinfo-gateway.yaml

4. Generate Traffic

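# Start tunnel (Minikube; keep it running in a separate terminal)

minikube tunnel

# Look up the ingress gateway's external IP

GATEWAY_IP=$(kubectl get svc istio-ingressgateway -n istio-system -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

# Send 50 requests

for i in {1..50}; do

curl -s http://$GATEWAY_IP/productpage > /dev/null

done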

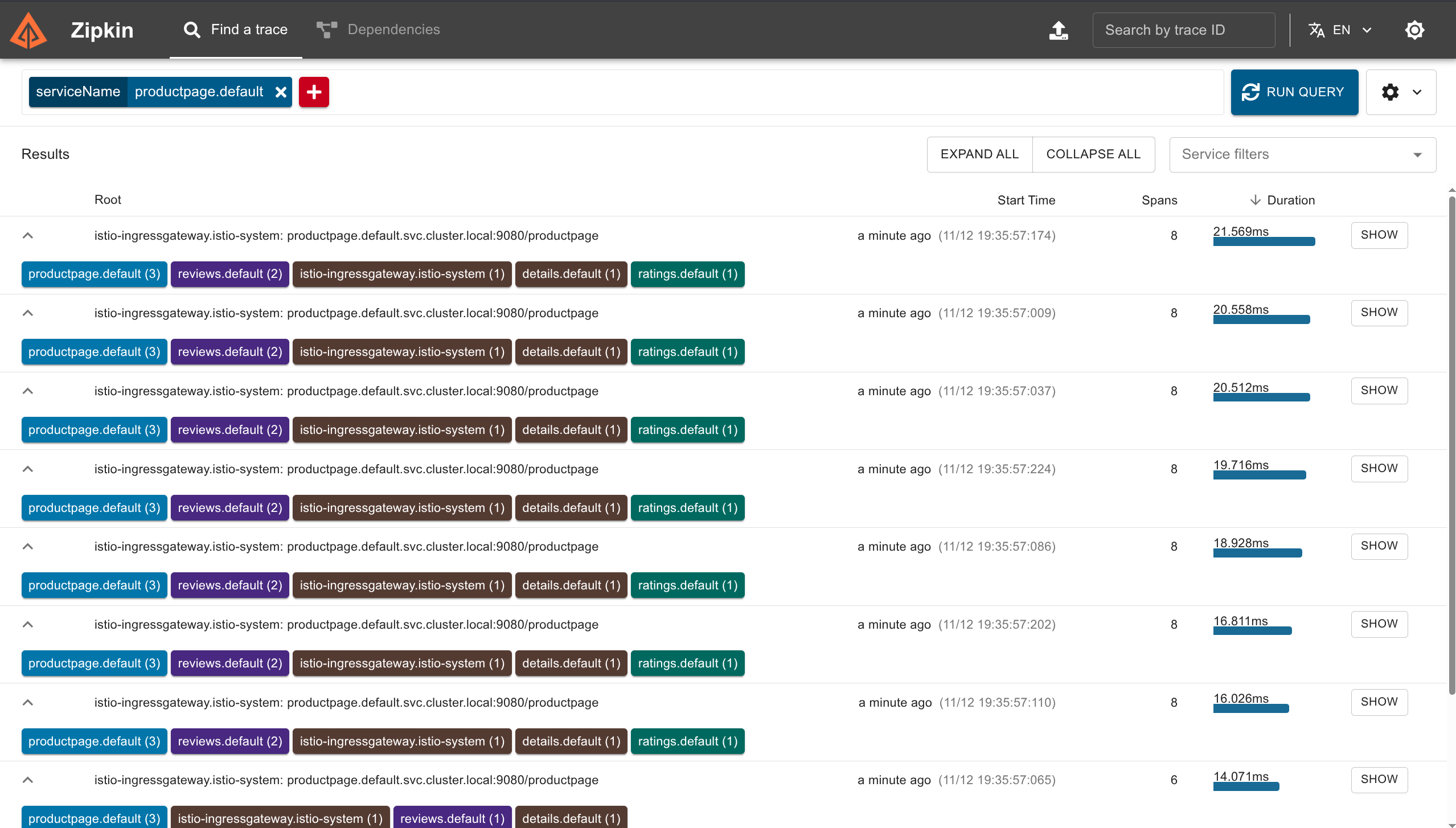

View Traces in Zipkin

Open Dashboard

istioctl dashboard zipkin

Then open browser: http://localhost:9411

Search for Traces

- Click serviceName dropdown

- Select productpage.default

- Click Search button

What You'll See

- List of traces (one per request)

- Each trace shows all services involved

- Click trace to see details

Trace Details Show

- Duration of each service call

- HTTP method (GET, POST)

- Status code (200, 500)

- Protocol used

- Full request path through services

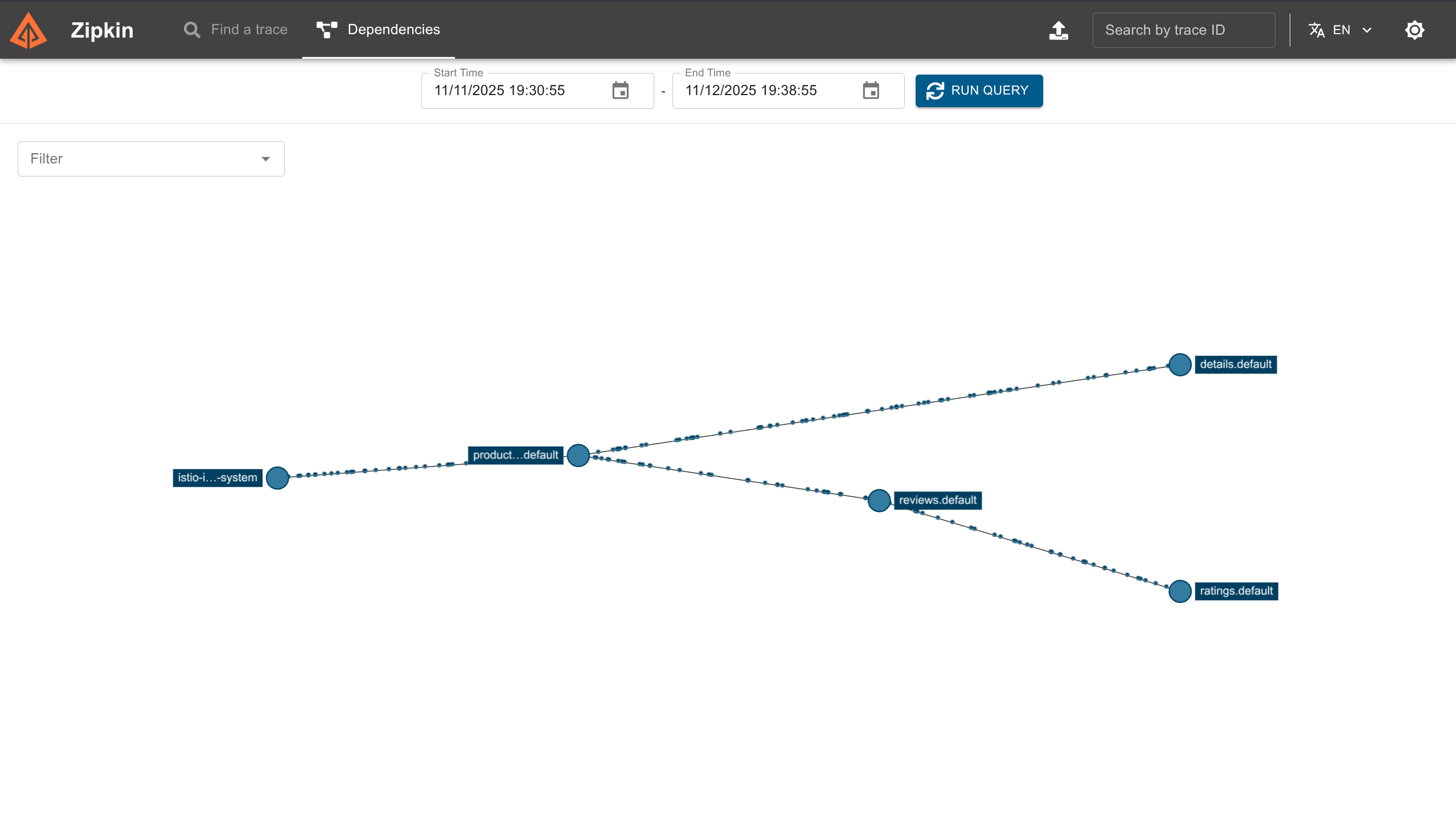

Dependencies View

What is Dependencies View?

A graph showing which services communicate with which services.

How to Access

- Click Dependencies tab in Zipkin

- Select time range

- Click Run Query

What You'll See

- Visual graph of service connections

- Arrows show request flow

- Helps find communication patterns

- Useful for debugging latency issues
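The Dependencies view is an aggregation over spans: every time a span's parent belongs to a different service, that is one caller to callee edge. The Python sketch below mimics that over spans shaped like Zipkin v2 JSON (id, parentId, localEndpoint.serviceName). Zipkin's own aggregation runs server-side and may differ; fetching traces (for example from http://localhost:9411/api/v2/traces?serviceName=productpage.default) is left out, and the function names are hypothetical.

```python
from collections import Counter


def service_of(span: dict) -> str:
    """Zipkin v2 spans carry the emitting service in localEndpoint.serviceName."""
    return span.get("localEndpoint", {}).get("serviceName", "unknown")


def dependency_edges(traces: list[list[dict]]) -> Counter:
    """Count caller -> callee service pairs across a list of traces."""
    edges: Counter = Counter()
    for trace in traces:
        by_id = {span["id"]: span for span in trace}
        for span in trace:
            parent = by_id.get(span.get("parentId"))
            if parent is None:
                continue  # root span: nothing upstream in this trace
            caller, callee = service_of(parent), service_of(span)
            if caller != callee:  # skip parent/child spans inside one service
                edges[(caller, callee)] += 1
    return edges
```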

Distributed Tracing = Track one request through many services

Span = One piece of work by one service

Trace = All spans together (full journey)

Headers = MUST propagate in your app code (x-b3-*, x-request-id)

Zipkin = Tool to see traces visually

Sampling = 100% for learning, 1-10% for production

Use for: Finding slow services, debugging errors, understanding request flow

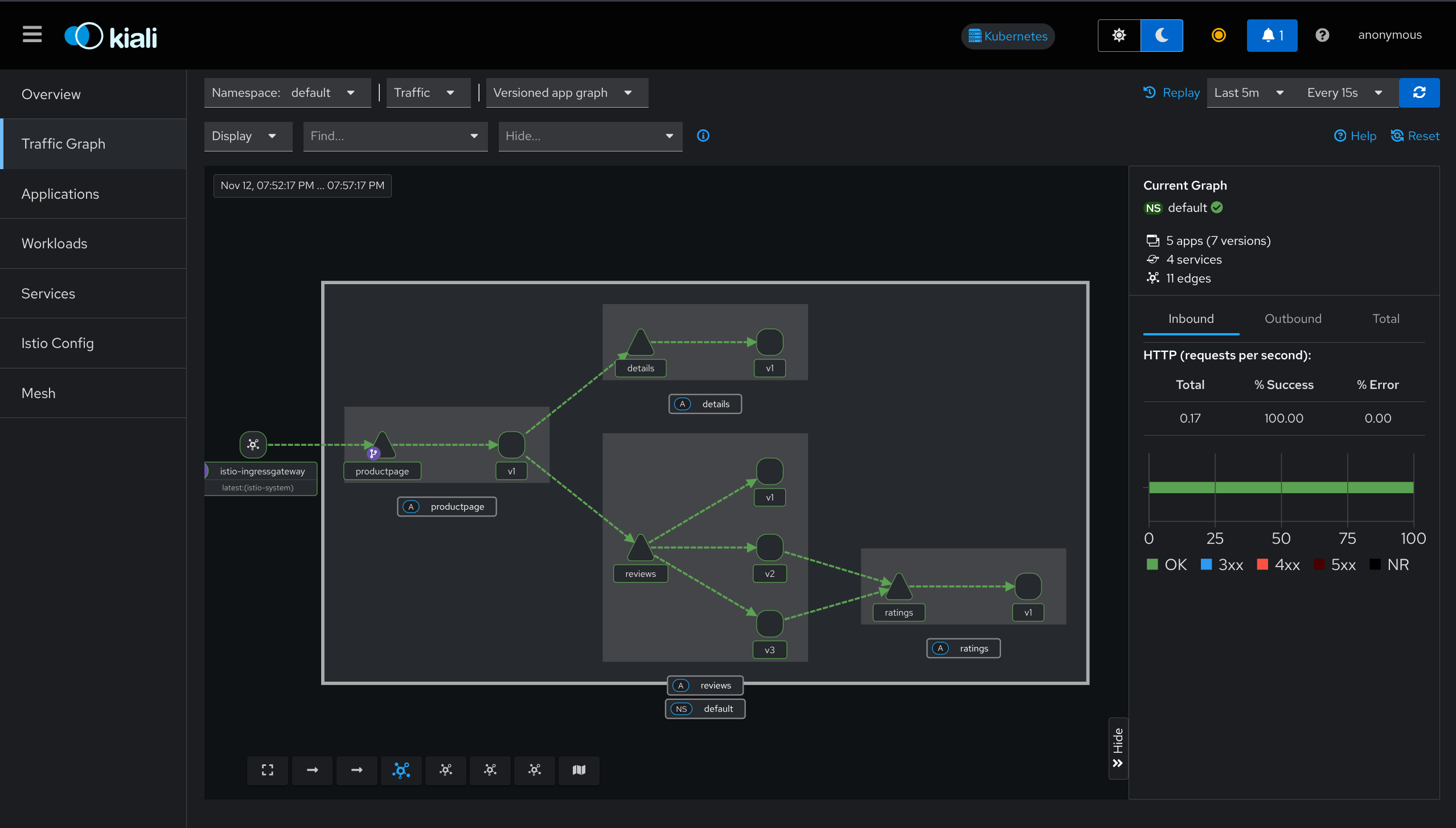

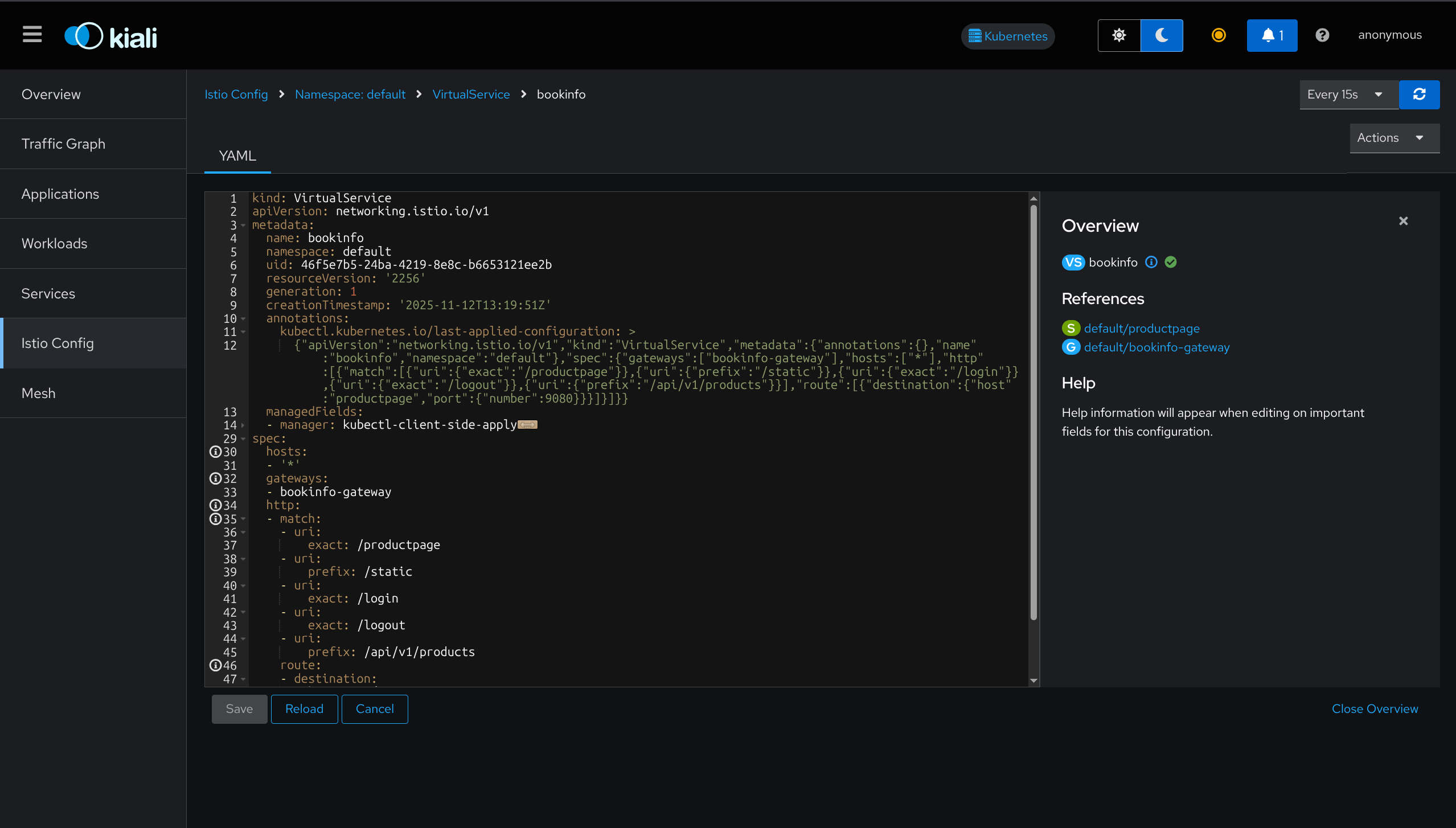

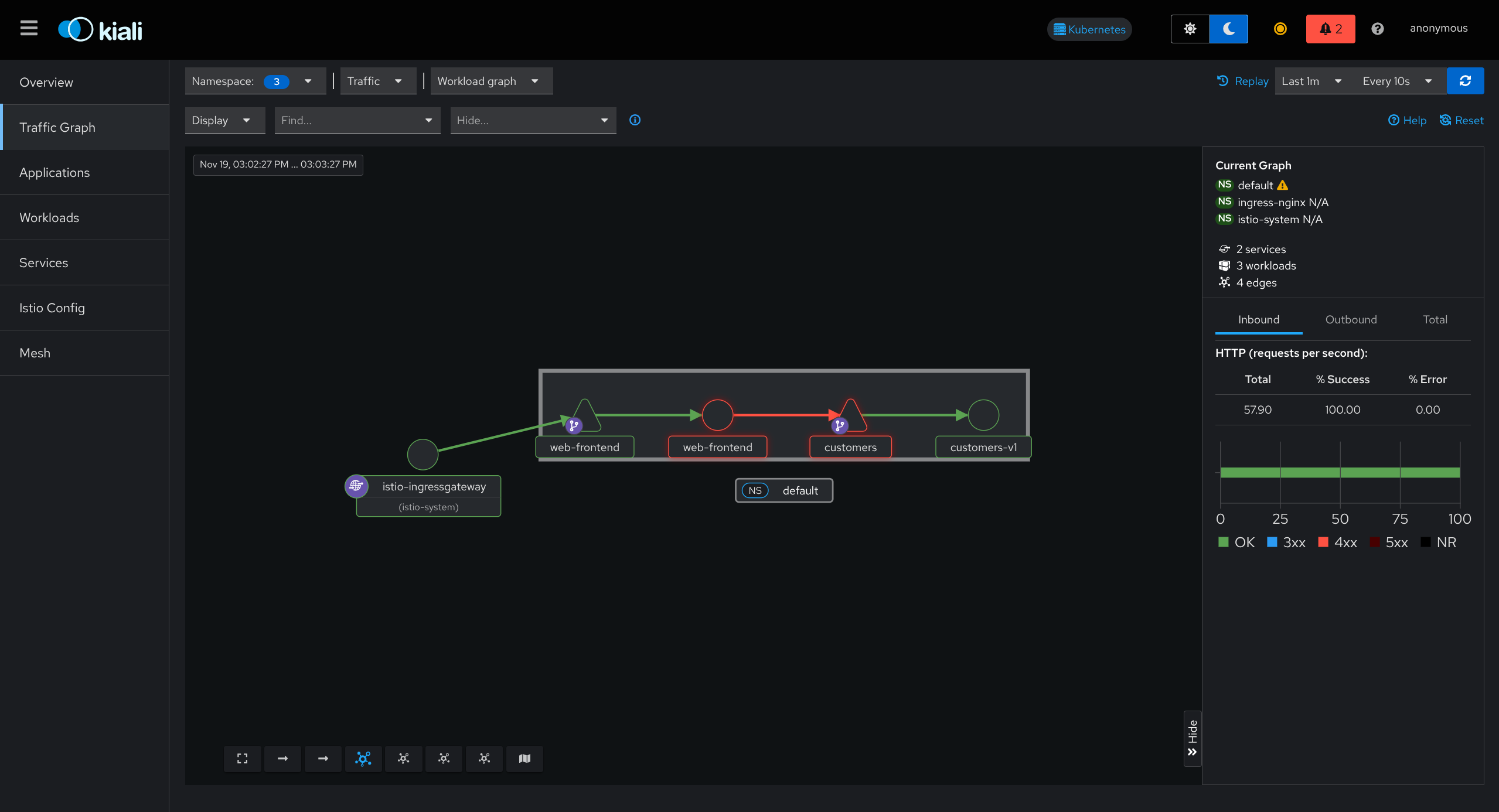

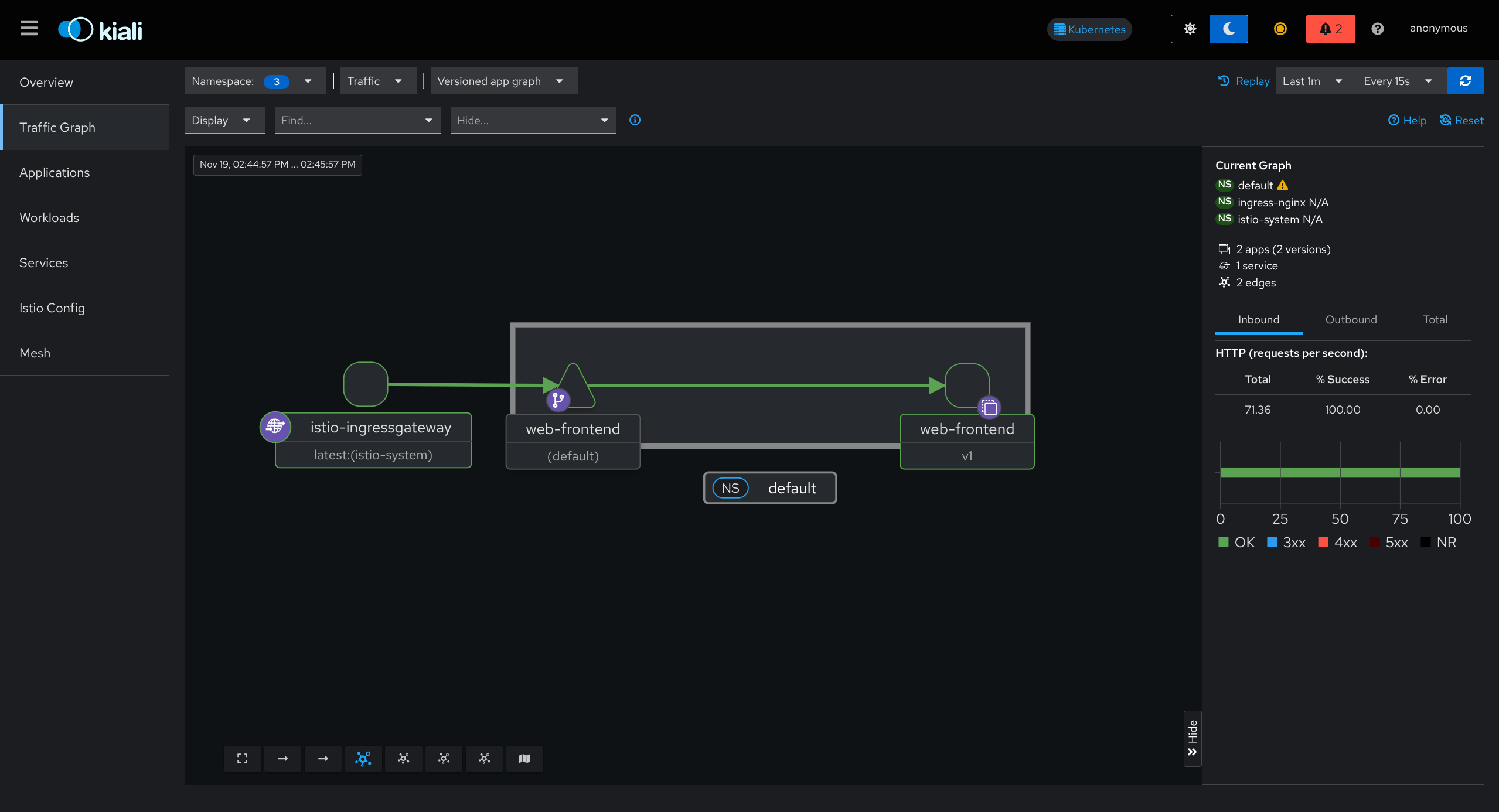

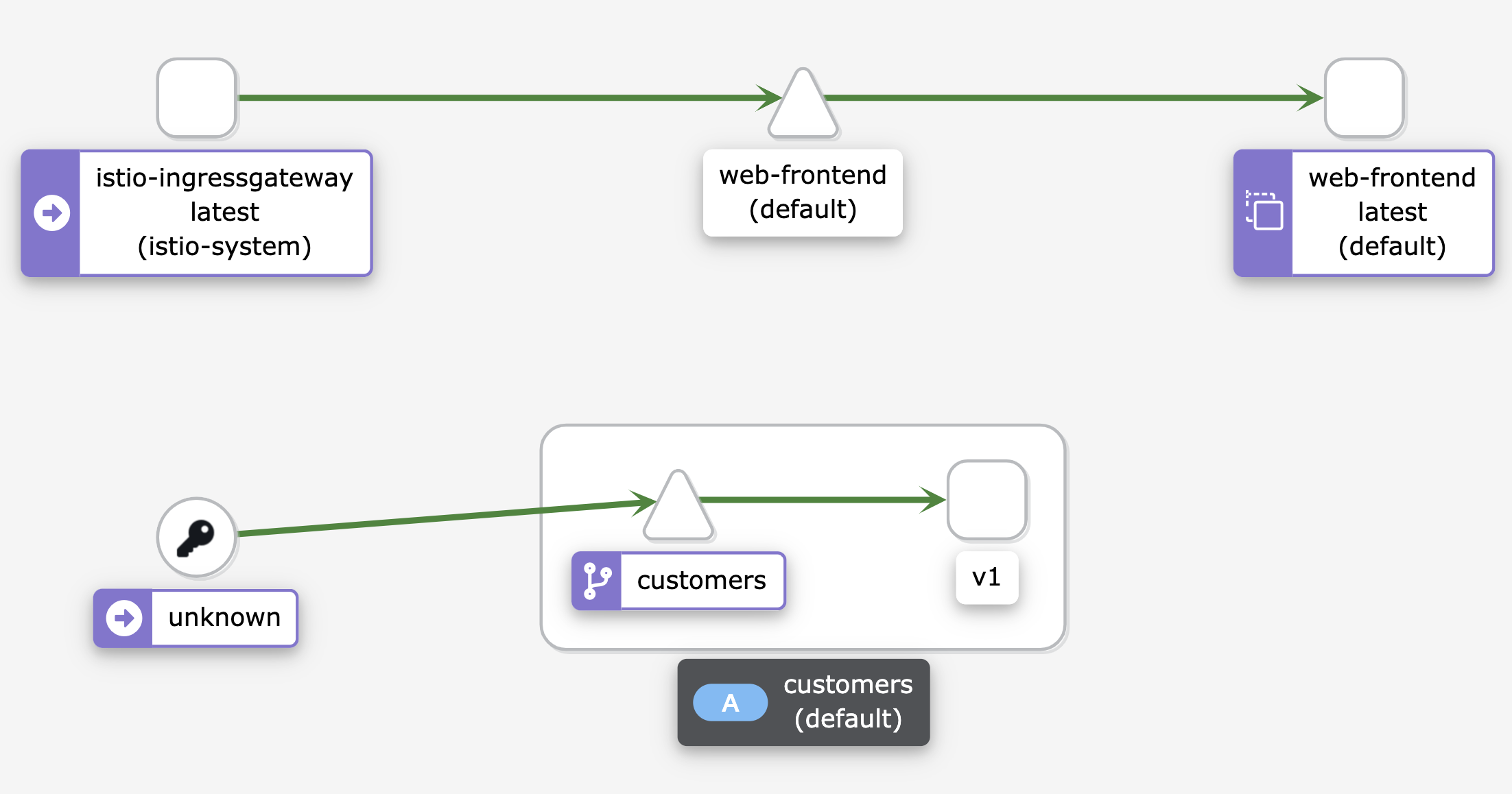

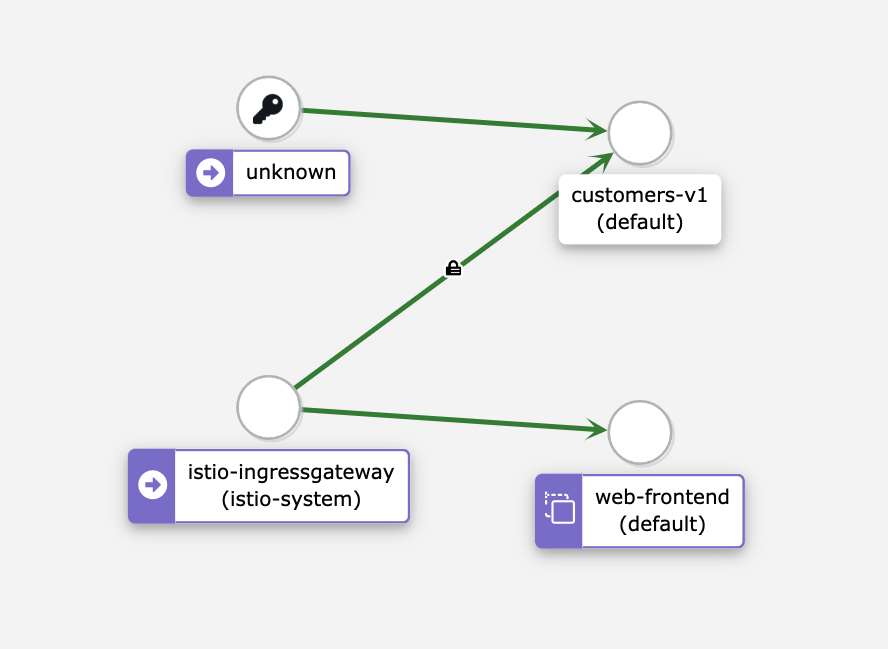

Kiali

What is Kiali?

Kiali is an observability and management console for Istio - like a control center with visual graphs showing your entire service mesh.

Purpose: See, manage, and troubleshoot your service mesh in one place.

Main Features

1. Service Mesh Topology Visualization

What it does: Shows visual graph of all services and how they communicate

- Dynamic service graph (updates in real-time)

- Shows service dependencies

- Displays request traces, latency, traffic flow

- Color-coded health status

Like: Google Maps for your services

2. Health Monitoring & Traffic Observability

What it does: Shows if services are healthy or having problems

- Real-time health status

- Color-coded alerts (green = good, red = problem)

- Shows traffic anomalies

- High error rates highlighted

- Failing requests shown clearly

Uses Prometheus for metrics (traffic, success rate, latency)

3. Istio Configuration Management

What it does: Create and edit Istio configs from UI (no YAML needed!)

Can configure:

- Traffic routing (send 10% to v2, 90% to v1)

- Circuit breaking (stop calling broken service)

- Fault injection (test how app handles errors)

- Request timeouts

Validates configs: Tells you if something is wrong before you apply it

4. Integration with Other Tools

- Jaeger: Click to see distributed traces

- Grafana: Click to see detailed metrics dashboards

Install Kiali

```bash
kubectl apply -f https://raw.githubusercontent.com/istio/istio/release-1.24/samples/addons/kiali.yaml
```

Install Prometheus, Grafana, and Jaeger first for full features.

Open Kiali Dashboard

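```bash
istioctl dashboard kiali
```

The command port-forwards Kiali and normally opens it in your browser. If it does not, browse to http://localhost:20001 manually.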

Understanding the Service Graph

Graph Elements

| Element | Represents | Details |
|---|---|---|
| Nodes | Services, workloads, or apps | Clickable for details |
| Edges | Traffic between services | Arrows show direction |
| Colors | Health status | Green/Yellow/Red |

Health Status Colors

- Green = Healthy

- Yellow = Warning

- Red = Error

Metrics Displayed

- Request rates (requests per second)

- Latency (response time)

- Error rates (failure percentage)

Graph Features

- Updates in real-time

- Can pause to analyze

- Can replay past traffic

- Click node to see details

- Click edge to see traffic details

Advanced Istio Configuration with Kiali

Kiali provides UI-driven Istio configuration management:

- Define traffic routing rules (canary deployments, weighted routing)

- Configure fault injection for testing resiliency

- Set up circuit breakers and request retries

- Apply mutual TLS policies and security settings

What You Can Do in Kiali

1. Traffic Routing

Set up canary deployments:

- Send 90% traffic to v1

- Send 10% traffic to v2 (testing new version)

2. Fault Injection

Test how app handles errors:

- Inject delays (make service slow)

- Inject errors (make service fail)

3. Circuit Breakers

Stop calling broken services automatically

4. Request Retries

Retry failed requests automatically

5. Security Settings

Apply mutual TLS (encrypt traffic between services)

6. Configuration Validation

Checks your Istio configs for mistakes before applying

Observability Tools Comparison

| Tool | What It Shows | Best For |
|---|---|---|
| Prometheus | Raw metrics (numbers) | Querying specific metrics |
| Grafana | Pretty graphs | Monitoring over time |
| Zipkin | Request traces | Following one request |
| Kiali | Visual service graph | Big picture + management |

Kiali = All-in-one dashboard for Istio

Visual graph = See all services and connections

Real-time = Updates as traffic flows

Management = Configure Istio from UI (no YAML!)

Integrations = Links to Jaeger (traces) and Grafana (metrics)

Color-coded = Green (good), Yellow (warning), Red (error)

Use for: Understanding mesh topology, finding problems, managing traffic routing

Istio Traffic Management

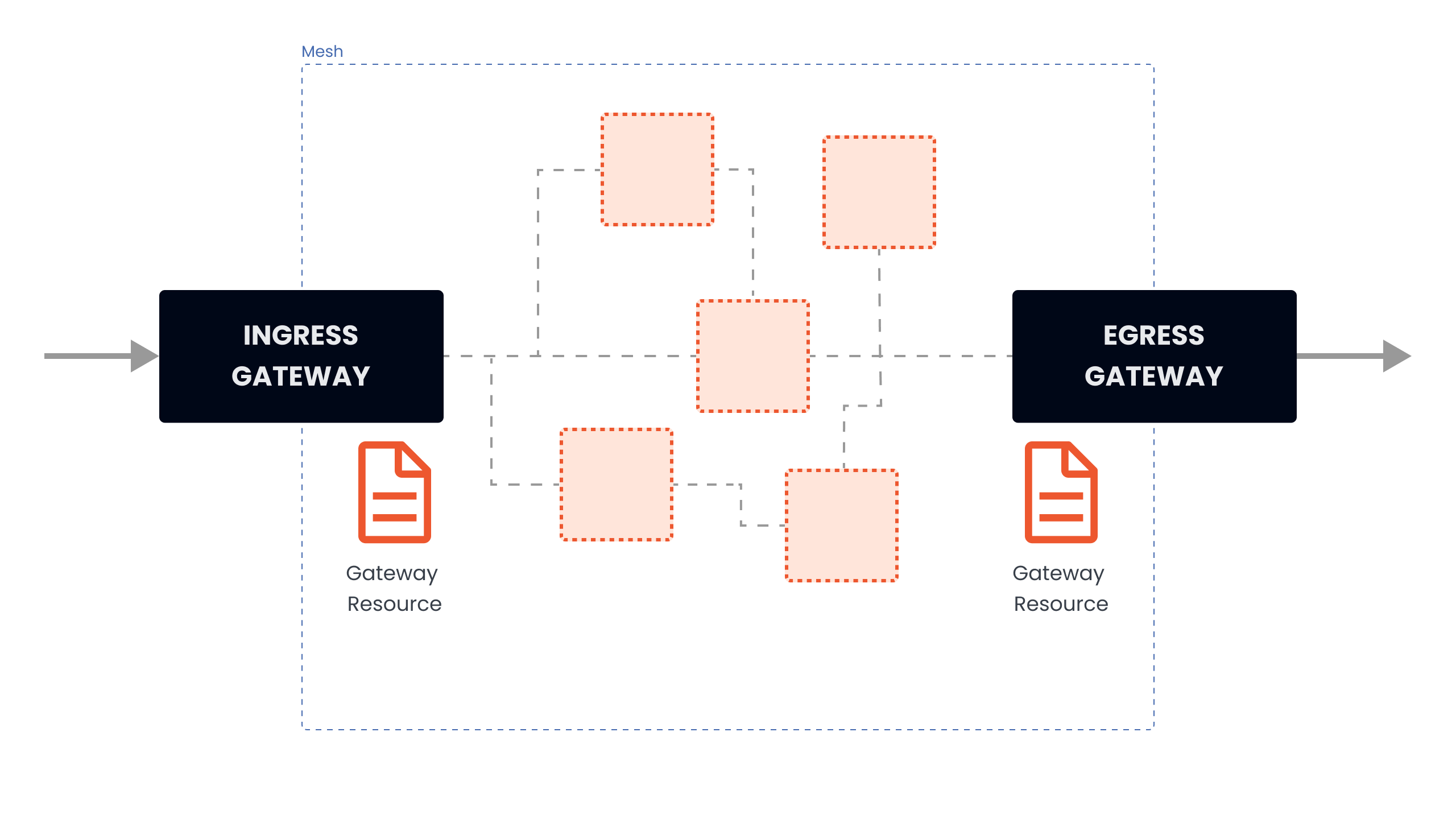

Gateways

What are Gateways?

Gateways are entry and exit points for traffic in your service mesh. They run standalone Envoy proxies that act as load balancers at the edge of the mesh.

Purpose: Control how external traffic enters or leaves the service mesh.

Gateway Types

Ingress Gateway

Function: Receives traffic coming INTO the cluster from external sources

Characteristics:

- Exposes services to external clients

- Gets external IP address (LoadBalancer type)

- Handles inbound HTTP/HTTPS/TCP traffic

- Acts as entry point to the mesh

Egress Gateway

Function: Handles traffic going OUT of the cluster to external services

Characteristics:

- Controls outbound traffic from mesh

- Internal only (ClusterIP type)

- Optional component (not installed by default)

- Useful for security and monitoring

Analogy: Airport gates - ingress for arrivals, egress for departures

Deploying Gateways

Two deployment methods: istioctl profiles or Helm charts

Method 1: Using istioctl Profiles

```bash
# Default profile (ingress gateway only)
istioctl install --set profile=default

# Demo profile (both ingress + egress gateways)
istioctl install --set profile=demo
```

Method 2: Using Helm

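```bash
# Add the Istio Helm chart repository (needed once)
helm repo add istio https://istio-release.storage.googleapis.com/charts
helm repo update

# Install base (creates istio-system if it does not exist)
helm install istio-base istio/base -n istio-system --create-namespace

# Install istiod
helm install istiod istio/istiod -n istio-system --wait

# Install ingress gateway
helm install istio-ingress istio/gateway -n istio-system

# Install egress gateway
helm install istio-egress istio/gateway \
  -n istio-system \
  --set service.type=ClusterIP \
  --set labels.app=istio-egress \
  --set labels.istio=egressgateway
```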

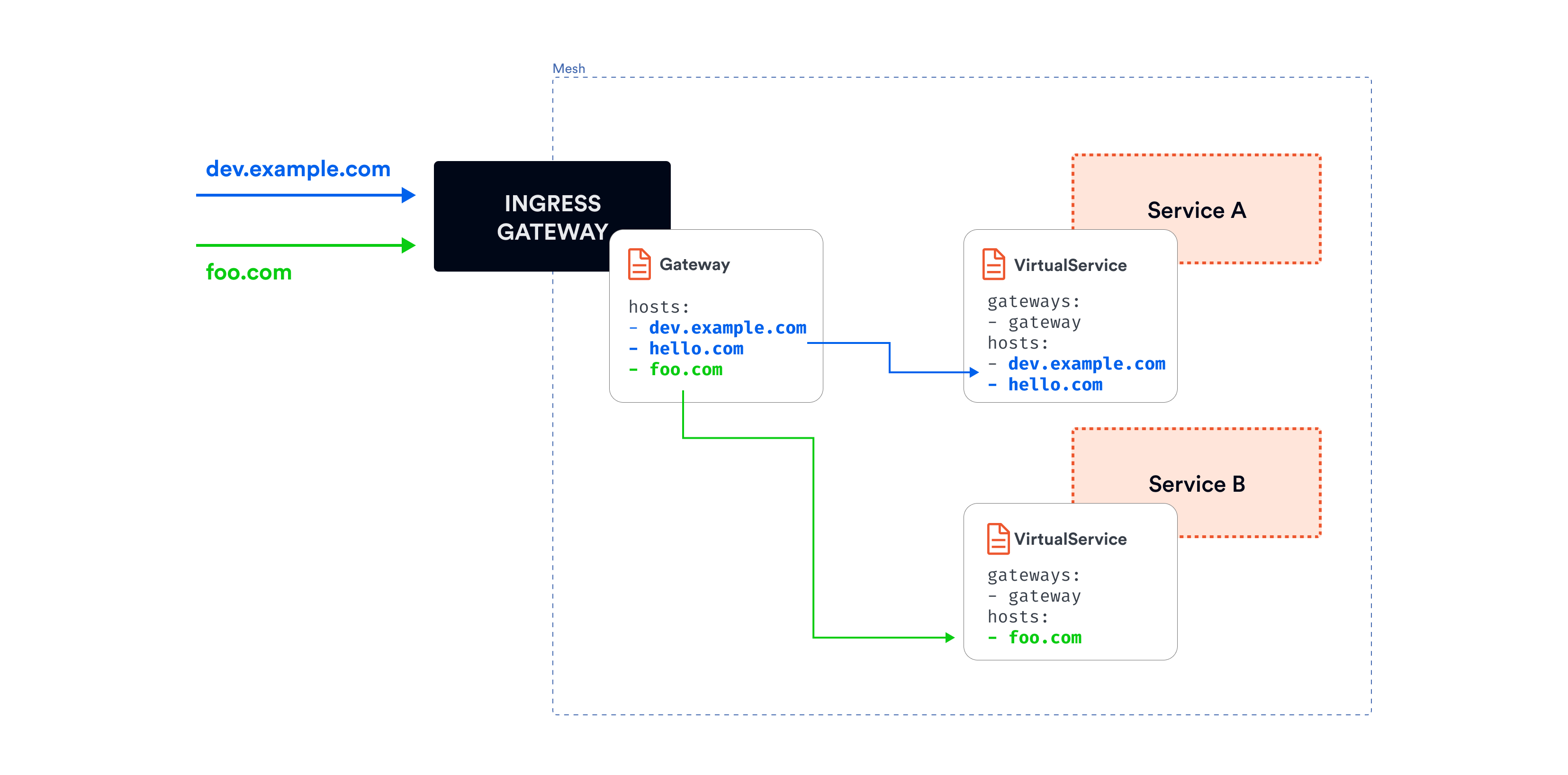

How Ingress Gateway Works

Purpose: Provides single external IP that routes traffic to different services based on hostname or path.

Traffic Flow:

External Client → Ingress Gateway (External IP) → VirtualService Rules → Internal Service

Example routing:

- dev.example.com → Service A

- test.example.com → Service B

- All through ONE external IP address
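Conceptually, the gateway reads the Host header of each request arriving on its single external IP and picks a backend. The toy Python model below shows that lookup for the example routing above. The service names are placeholders. The real matching is done by Envoy from the route configuration Istio generates out of Gateway and VirtualService (or HTTPRoute) resources.

```python
from typing import Optional

# Hypothetical routing table behind one ingress gateway IP.
HOST_ROUTES = {
    "dev.example.com": "service-a",
    "test.example.com": "service-b",
}


def route_by_host(host_header: str) -> Optional[str]:
    """Pick a backend from the Host header; None means no route (typically a 404)."""
    # Host headers may include a port and are case-insensitive.
    hostname = host_header.split(":", 1)[0].lower()
    return HOST_ROUTES.get(hostname)


if __name__ == "__main__":
    for host in ("dev.example.com", "TEST.example.com:80", "prod.example.com"):
        print(host, "->", route_by_host(host))
```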

Benefits:

- Single entry point for all external traffic

- Centralized TLS termination

- Simplified DNS management

- Unified security policies

Gateways = Entry and exit points for mesh traffic

Ingress Gateway = Handles incoming traffic (gets external IP)

Egress Gateway = Handles outgoing traffic (optional, internal only)

Two configuration approaches:

- Istio Gateway (traditional) + VirtualService

- Kubernetes Gateway API (modern) + HTTPRoute

Gateway alone = Opens the door (defines ports and protocols)

Routing rules = Direct traffic to services (VirtualService or HTTPRoute)

Use for: Exposing services externally, controlling outbound traffic, centralized traffic management

Gateway Configuration Approaches

Two configuration methods: Istio Gateway resource (traditional) or Kubernetes Gateway API (modern standard)

Approach 1: Istio Gateway Resource (Traditional)

What it does: Configures ports, protocols, and hostnames that the gateway accepts

Configuration example:

```yaml
apiVersion: networking.istio.io/v1
kind: Gateway
metadata:
  name: my-gateway
  namespace: default
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - dev.example.com
    - test.example.com
```

Configuration breakdown:

- selector: Targets the istio-ingressgateway pods

- port: Opens port 80 for HTTP traffic

- hosts: Accepts traffic for specified domains

- protocol: Defines traffic protocol (HTTP, HTTPS, TCP)

Important: Gateway alone only opens the door - it doesn't route traffic to services.

Requires VirtualService: Must create VirtualService to define WHERE traffic goes after entering the gateway.

Approach 2: Kubernetes Gateway API (Modern Standard)

What it is: Kubernetes-native, vendor-neutral way to configure gateways. Modern replacement for Ingress resource.

Advantages over traditional approach:

- Role-based: Different teams can manage different parts (separation of concerns)

- Extensible: Better support for advanced routing scenarios

- Portable: Works across different gateway implementations

- Standard: Kubernetes SIG-Network maintained specification

- Future-proof: Recommended for new deployments

Step 1: Install Gateway API CRDs

```bash
kubectl get crd gateways.gateway.networking.k8s.io &> /dev/null || \
  { kubectl kustomize "github.com/kubernetes-sigs/gateway-api/config/crd?ref=v1.2.1" | kubectl apply -f -; }
```

Step 2: Create Gateway Resource

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: my-k8s-gateway
  namespace: default
spec:
  gatewayClassName: istio
  listeners:
  - name: http          # every listener needs a unique name
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: Same
```

Configuration breakdown:

- gatewayClassName: Specifies Istio as the gateway implementation

- listeners: Defines protocol (HTTP) and port (80)

- allowedRoutes: Controls which namespaces can attach routes

- from: Same: Only routes from same namespace can attach

Step 3: Attach Routes with HTTPRoute

Purpose: Define routing rules (replaces VirtualService in Gateway API approach)

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: my-route
  namespace: default
spec:
  parentRefs:
  - name: my-k8s-gateway
  rules:
  - matches:
    - path:
        type: PathPrefix   # valid types: Exact, PathPrefix, RegularExpression
        value: /hello
    backendRefs:
    - name: hello-service
      port: 8080
```

Configuration breakdown:

- parentRefs: Attaches this route to my-k8s-gateway

- matches: Defines path matching rules (prefix /hello)

- backendRefs: Specifies target service and port

- Result: Requests to /hello route to hello-service:8080

Verifying Gateway Deployment

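```bash
kubectl get svc -n istio-system
```

Expected output (abridged; istio-egressgateway appears only if an egress gateway was installed, e.g. with the demo profile):

```
NAME                   TYPE           EXTERNAL-IP
istio-ingressgateway   LoadBalancer   XX.XXX.XXX.XXX
istio-egressgateway    ClusterIP      <none>
istiod                 ClusterIP      <none>
```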

Service types explained:

- istio-ingressgateway: LoadBalancer type (gets external IP)

- istio-egressgateway: ClusterIP type (internal only)

- istiod: ClusterIP type (control plane, internal only)

External IP assignment:

- Cloud providers (AWS, GCP, Azure): External IP assigned automatically by cloud load balancer

- Minikube: Requires the minikube tunnel command to expose the external IP

- Kind/Docker Desktop: May require port forwarding or additional configuration

Egress Gateway Configuration

Purpose: Controls and monitors traffic leaving the mesh to external services.

Use cases:

- Centralized control: Single exit point for all outbound traffic

- Security policies: Apply consistent security rules to external calls

- Audit logging: Log all external service calls for compliance

- Access control: Restrict which external services can be accessed

- Traffic monitoring: Monitor and measure external dependencies

Configuration example:

```yaml
apiVersion: networking.istio.io/v1
kind: Gateway
metadata:
  name: egress-gateway
  namespace: istio-system
spec:
  selector:
    istio: egressgateway
  servers:
  - port:
      number: 443
      name: tls
      protocol: TLS
    hosts:
    - external-service.com
    tls:
      mode: PASSTHROUGH   # forward the client's HTTPS stream without terminating it
```

Configuration breakdown:

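- selector: Targets the istio-egressgateway pods

- port: Opens port 443 for outbound TLS (HTTPS) traffic

- hosts: Only allows traffic to external-service.com

- Result: The gateway accepts outbound traffic for one external service only. Traffic only flows through it once two more resources exist: a ServiceEntry for external-service.com, and a VirtualService that sends sidecar traffic to the egress gateway.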

Gateway Configuration Comparison

| Feature | Istio Gateway | Kubernetes Gateway API |
|---|---|---|
| Standard | Istio-specific | Kubernetes standard |
| Routing | VirtualService | HTTPRoute |
| Flexibility | Good | Better |
| Role-based | No | Yes |
| Future | Maintained | Recommended |
| Learning curve | Moderate | Easier |
| Maturity | Stable | Growing |

- New projects: Use Kubernetes Gateway API (modern standard)

- Existing Istio setups: Continue with Istio Gateway (fully supported)

- Complex routing: Both support advanced features

- Team collaboration: Gateway API offers better role separation

Best Practices

Gateway Selection:

- Use the default profile for production (ingress only)

- Use the demo profile for learning (ingress + egress)

- Consider resource requirements for your environment

Security:

- Always use HTTPS in production

- Configure proper TLS certificates

- Limit egress traffic to required external services only

Monitoring:

- Monitor gateway resource usage

- Set up alerts for gateway health

- Track ingress/egress traffic patterns

Gateway = Entry/exit point for mesh traffic

Ingress = Traffic coming IN (gets external IP)

Egress = Traffic going OUT (internal only)

Two approaches: Istio Gateway (traditional) or Kubernetes Gateway API (modern)

Gateway alone = Opens the door, needs routing rules

VirtualService/HTTPRoute = Defines where to send traffic

Use for: Exposing services to internet, controlling external calls, centralizing traffic management

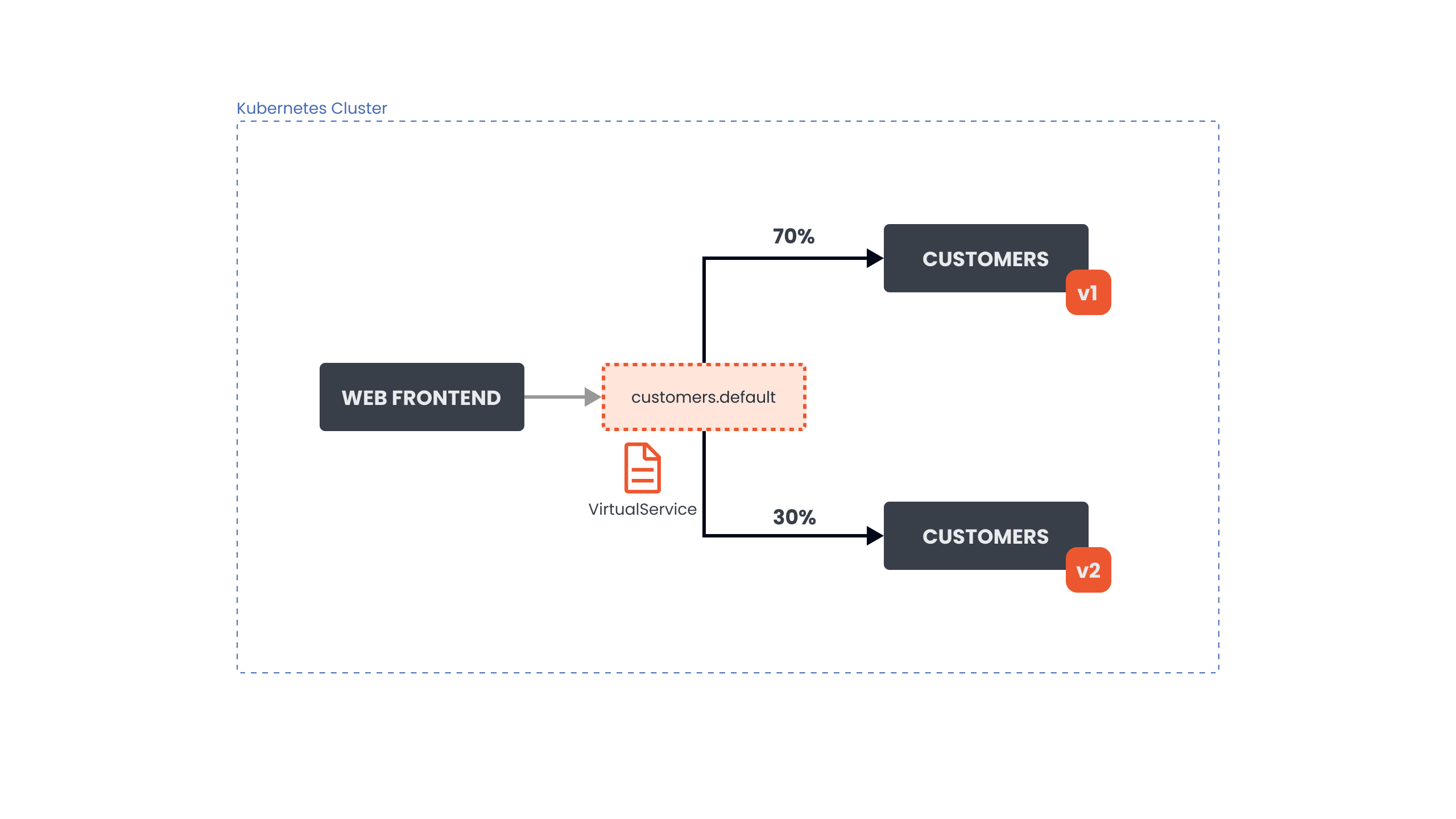

Simple Routing

What is Traffic Routing?

Traffic routing = Controlling where requests go based on rules and policies.

Purpose: Direct traffic to specific service versions for canary deployments, A/B testing, or gradual rollouts.

Analogy: Like a traffic cop directing cars to different roads based on destination.

Common Use Case

Scenario: You have 2 versions of an application deployed simultaneously:

- customers-v1 deployment (stable/old version)

- customers-v2 deployment (new version being tested)

- Goal: Split traffic between versions (e.g., 70% to v1, 30% to v2)

Why needed: Test new version with real traffic before full rollout, minimizing risk.

Traffic Routing Approaches

Two methods available: Kubernetes Gateway API (modern standard) or Istio VirtualService (traditional)

Approach 1: Using Kubernetes Gateway API (Modern Standard)

What it uses: HTTPRoute resource with weight-based traffic distribution

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: customers-route
spec:
  parentRefs:
  - name: example-gateway
    namespace: default
  hostnames:
  - "customers.default.svc.cluster.local"
  rules:
  - backendRefs:
    - name: customers-v1
      port: 80
      weight: 70
    - name: customers-v2
      port: 80
      weight: 30
```

Configuration breakdown:

- parentRefs: Attaches route to example-gateway

- hostnames: Specifies target service hostname

- backendRefs: Defines backend services with weights

- weight: 70: Routes 70% of traffic to customers-v1

- weight: 30: Routes 30% of traffic to customers-v2

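Important: Gateway API weights are relative. Each backend receives weight / (sum of all weights) of the traffic, so 70/30 and 7/3 produce the same split. Keeping the weights summing to 100 simply makes them read as percentages.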
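The weighted split can be modeled in a few lines of Python, using the backend names from the HTTPRoute above. This is a simulation for intuition, not Envoy's implementation. The function names are hypothetical.

```python
import random
from collections import Counter
from typing import Dict


def traffic_shares(weights: Dict[str, int]) -> Dict[str, float]:
    """Expected fraction of traffic per backend: weight / sum of all weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: weight / total for name, weight in weights.items()}


def pick_backend(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose the backend for one request, with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


if __name__ == "__main__":
    weights = {"customers-v1": 70, "customers-v2": 30}
    print(traffic_shares(weights))  # {'customers-v1': 0.7, 'customers-v2': 0.3}
    print(traffic_shares({"customers-v1": 7, "customers-v2": 3}))  # same split

    rng = random.Random(42)
    counts = Counter(pick_backend(weights, rng) for _ in range(10_000))
    print(counts)  # close to 7000 / 3000, but not exact
```

The split is per request and probabilistic, so short time windows can deviate noticeably from 70/30.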

Approach 2: Using VirtualService (Istio Traditional)

What it uses: VirtualService resource with subset-based routing and weights

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers-route
spec:
  hosts:
  - customers.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
      weight: 70
    - destination:
        host: customers.default.svc.cluster.local
        subset: v2
      weight: 30
```

Configuration breakdown:

- hosts: Specifies target service

- http.route: Defines routing rules

- destination.subset: References named subset (v1 or v2)

- weight: 70: Routes 70% of traffic to v1 subset

- weight: 30: Routes 30% of traffic to v2 subset

Note: Requires DestinationRule to define subsets (covered in next section)

Understanding VirtualService Key Fields

Field: hosts

Purpose: Specifies which service this VirtualService applies to

Format options:

- Full name: customers.default.svc.cluster.local (explicit)

- Short name: customers (within same namespace)

- Wildcard: *.example.com (matches multiple services)

Example: hosts: ["customers.default.svc.cluster.local"]

Field: http

Purpose: Defines list of HTTP routing rules

Contains: Array of route configurations with destinations and weights

Structure: Each route can have match conditions, destinations, weights, and policies

Field: destination

Purpose: Specifies where to send the traffic

Components:

- host: Target service name (e.g., customers.default.svc.cluster.local); the only required field

- subset: Version identifier (e.g., v1, v2); optional, requires a DestinationRule that defines it

- port: Optional port specification

Field: weight

Purpose: Defines percentage of traffic to route to each destination

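Rules:

- Must be an integer between 0 and 100

- Keep the weights across all destinations summing to 100 so each reads as a percentage (recent Istio releases treat weights as relative proportions, weight / sum of weights)

- If there is only one destination, it receives all traffic, so the weight can be omitted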

Example: weight: 70 means 70% of traffic goes to this destination

Binding VirtualService to Gateway

Purpose: Expose service through gateway to make it accessible from external clients

Configuration example:

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers-route
spec:
  hosts:
  - customers.default.svc.cluster.local
  gateways:
  - my-gateway
  http:
  - route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
      weight: 70
    - destination:
        host: customers.default.svc.cluster.local
        subset: v2
      weight: 30
```

Configuration breakdown:

- gateways: Binds the VirtualService to the gateway named my-gateway

- Effect: Traffic entering through my-gateway follows these routing rules

- Without gateways field: VirtualService only applies to mesh-internal (sidecar) traffic

- With gateways field: VirtualService applies only to traffic from the listed gateways; add the reserved name mesh to the list to keep applying it to sidecar traffic as well

Host Matching Rules (Gateway + VirtualService)

Important: When VirtualService is attached to Gateway, host configuration must be compatible.

Matching logic: Gateway hosts act as a filter - VirtualService hosts must pass through this filter.

Host Matching Examples

| Gateway Hosts | VirtualService Hosts | Result | Explanation |
|---|---|---|---|
| * | customers.default.svc.cluster.local | ✅ Works | Wildcard * allows all hosts |
| customers.default.svc.cluster.local | customers.default.svc.cluster.local | ✅ Works | Exact match |
| *.example.com | api.example.com | ✅ Works | Wildcard pattern match |
| hello.default.svc.cluster.local | customers.default.svc.cluster.local | ❌ Fails | No match between hosts |

Rule: Gateway hosts define what traffic is accepted; VirtualService hosts define what traffic is routed.
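The three matching cases in the table can be written as a small Python check. This is a simplified model for reasoning about which hosts will be routed, not Istio's actual matcher. It ignores the optional "namespace/" prefix on Gateway hosts and wildcard VirtualService hosts, and the function names are hypothetical.

```python
from typing import List


def gateway_admits(gateway_host: str, vs_host: str) -> bool:
    """Does one Gateway `hosts` entry admit a (non-wildcard) VirtualService host?"""
    gateway_host, vs_host = gateway_host.lower(), vs_host.lower()
    if gateway_host == "*":
        return True
    if gateway_host.startswith("*."):
        # "*.example.com" admits "api.example.com" but not bare "example.com".
        return vs_host.endswith(gateway_host[1:])
    return gateway_host == vs_host


def routable_hosts(gateway_hosts: List[str], vs_hosts: List[str]) -> List[str]:
    """VirtualService hosts that at least one Gateway host admits."""
    return [h for h in vs_hosts if any(gateway_admits(g, h) for g in gateway_hosts)]


if __name__ == "__main__":
    print(routable_hosts(["dev.example.com", "test.example.com"],
                         ["customers.default.svc.cluster.local"]))  # []
```

With the hosts from the my-gateway example, customers.default.svc.cluster.local is not admitted. A VirtualService bound to that gateway therefore also needs a host the gateway accepts, such as dev.example.com.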

Routing Methods Comparison

| Feature | HTTPRoute (Gateway API) | VirtualService (Istio) |
|---|---|---|
| Standard | Kubernetes standard | Istio-specific |
| Weight field | weight in backendRefs | weight next to each route destination |
| Version handling | Different services | Subsets (via DestinationRule) |
| Gateway binding | parentRefs field | gateways field |
| Recommendation | Preferred for new projects | Fully supported, more features |
| Complexity | Simpler syntax | More advanced capabilities |
| Learning curve | Easier to learn | Steeper but more powerful |
| Portability | Works with any gateway | Istio-specific |
| Maturity | Growing adoption | Battle-tested |

Common Traffic Routing Use Cases

| Scenario | Weight Distribution | Purpose | Risk Level |
|---|---|---|---|
| Canary Deployment | 90% v1, 10% v2 | Test new version with minimal traffic | Low |
| Blue-Green Deployment | 100% v1 → 100% v2 | Complete instant switch | Medium |
| A/B Testing | 50% v1, 50% v2 | Compare versions with equal traffic | Medium |
| Gradual Rollout | 70% v1, 30% v2 → adjust over time | Slowly increase new version traffic | Low |
| Shadow Traffic | 100% v1 (+ mirror to v2) | Test without affecting users | Very Low |

Traffic Split Progression Example

Typical canary deployment progression:

Phase 1: 100% v1, 0% v2 (Initial state - v2 deployed but no traffic)

↓

Phase 2: 95% v1, 5% v2 (Initial canary - minimal exposure)

↓

Phase 3: 90% v1, 10% v2 (Increased canary - monitor metrics)

↓

Phase 4: 70% v1, 30% v2 (Significant traffic - validate performance)

↓

Phase 5: 50% v1, 50% v2 (Equal split - final validation)

↓

Phase 6: 10% v1, 90% v2 (Near completion - prepare for full rollout)

↓

Phase 7: 0% v1, 100% v2 (Complete migration - remove v1)

Decision points: Monitor error rates, latency, and business metrics at each phase before proceeding.
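The decision at each phase can be expressed as a simple gate: advance one step when v2 looks healthy, otherwise roll back. The Python sketch below uses the phases from the progression above. The thresholds, metric fields and function names are hypothetical. In practice the resulting weights would be written back into the HTTPRoute or VirtualService, by hand or by a rollout tool.

```python
from dataclasses import dataclass

# (v1 weight, v2 weight) for each phase of the progression above.
PHASES = [(100, 0), (95, 5), (90, 10), (70, 30), (50, 50), (10, 90), (0, 100)]

# Hypothetical promotion gates; derive real values from your SLOs.
MAX_ERROR_RATE = 0.01         # at most 1% failed requests on v2
MAX_P99_LATENCY_MS = 500.0    # at most 500 ms p99 latency on v2


@dataclass
class CanaryMetrics:
    error_rate: float         # fraction of v2 requests that failed, e.g. 0.002
    p99_latency_ms: float     # v2 99th-percentile latency in milliseconds


def next_phase(current: int, metrics: CanaryMetrics) -> int:
    """Advance one phase if v2 looks healthy; otherwise roll back to phase 0 (100% v1)."""
    healthy = (metrics.error_rate <= MAX_ERROR_RATE
               and metrics.p99_latency_ms <= MAX_P99_LATENCY_MS)
    if not healthy:
        return 0
    return min(current + 1, len(PHASES) - 1)


if __name__ == "__main__":
    phase = 2  # 90% v1, 10% v2
    phase = next_phase(phase, CanaryMetrics(error_rate=0.002, p99_latency_ms=180.0))
    v1, v2 = PHASES[phase]
    print(f"set weights: customers-v1={v1}, customers-v2={v2}")  # 70 / 30
```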

Best Practices

Weight Configuration:

- Always ensure weights sum to exactly 100

- Start with small percentages for new versions (5-10%)

- Increase gradually based on metrics and confidence

- Document weight changes and rationale

Monitoring:

- Watch error rates closely during traffic shifts

- Monitor latency for both versions

- Set up alerts for anomalies

- Use Grafana/Kiali for real-time visualization

Rollback Strategy:

- Keep previous version running during migration

- Have rollback plan ready (adjust weights back)

- Test rollback procedure in staging first

- Document rollback steps for team

Testing:

- Validate new version in staging before production

- Use small traffic percentages initially

- Monitor for at least 24 hours at each phase

- Verify business metrics, not just technical metrics

Traffic routing = Control where requests go based on percentage weights

Two approaches: HTTPRoute (Kubernetes standard, simpler) or VirtualService (Istio-specific, more features)

Weights = Relative traffic split, conventionally written to sum to 100 so they read as percentages (e.g., 70+30, 90+10)

Canary pattern = Gradual rollout starting with small percentage (5-10%) to new version

Gateway binding = Exposes service to external traffic through gateway

Host matching = Gateway hosts filter incoming traffic; VirtualService hosts define routing

Common use cases: Canary deployments, A/B testing, gradual rollouts, blue-green deployments

Key benefit = Zero-downtime deployments with easy rollback capability

Configuration-only = No application code changes required

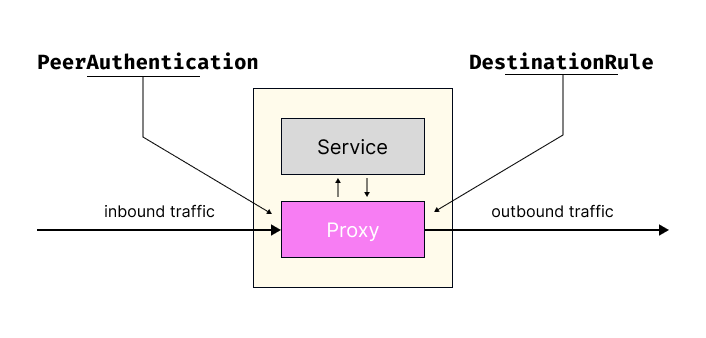

Subsets and DestinationRule

What are Subsets?

Subsets = Named groups of service instances (pods) representing different versions of the same service.

Purpose: Enable version-specific routing by grouping pods with common labels.

How it works: Uses pod labels to identify which instances belong to which subset.

Example scenario:

- Pods with label version: v1 → subset v1

- Pods with label version: v2 → subset v2

- Both subsets share same service name but represent different versions

Analogy: Like organizing books by edition - same title, different versions.

What is DestinationRule?

DestinationRule = Istio resource that defines subsets and traffic policies for a service.

Two main functions:

- Subset Definition: Group pods by labels (v1, v2, v3)

- Traffic Policies: Configure how traffic is handled (load balancing, connection limits, circuit breaking)

Relationship with VirtualService:

- VirtualService: Decides WHERE to send traffic (which subset)

- DestinationRule: Defines WHAT subsets exist and HOW to handle traffic

Basic DestinationRule Example

```yaml
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: customers-destination
spec:
  host: customers.default.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
```

Configuration breakdown:

- host: Target service (customers.default.svc.cluster.local)

- subsets: List of named subsets

- name: Subset identifier (v1, v2) - referenced by VirtualService

- labels: Pod label selector (version: v1 or version: v2)

What this does:

- Defines 2 subsets for the customers service

- Subset v1 = all pods with label version: v1

- Subset v2 = all pods with label version: v2

- Enables VirtualService to route to specific versions
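Subset membership is plain label matching over the pods behind the service. A pod belongs to a subset when its labels contain every key/value pair in the subset's labels. The Python sketch below uses made-up pod names and the subsets from the DestinationRule above.

```python
from typing import Dict, List

Labels = Dict[str, str]

# Hypothetical endpoints of the customers Service and their pod labels.
PODS: Dict[str, Labels] = {
    "customers-v1-7d9f": {"app": "customers", "version": "v1"},
    "customers-v1-x2lq": {"app": "customers", "version": "v1"},
    "customers-v2-9kpz": {"app": "customers", "version": "v2"},
}

# Subsets as declared in the DestinationRule: subset name -> labels.
SUBSETS: Dict[str, Labels] = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}


def subset_members(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Pods whose labels include every key/value pair in the subset's selector."""
    return sorted(
        name for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


if __name__ == "__main__":
    for subset, selector in SUBSETS.items():
        print(subset, subset_members(selector, PODS))
```

A typo in a pod label (for example `version: V2`) silently leaves that pod out of every subset. This is why the best practices below stress consistent labeling.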

Traffic Policies

Traffic policies = Configuration rules applied AFTER routing decisions are made, controlling HOW traffic is handled at the destination.

When applied: After VirtualService determines WHERE to send traffic, DestinationRule policies control HOW that traffic is processed.

Five policy types:

- Load Balancer Settings: Algorithm for distributing requests across pods

- Connection Pool Settings: Limits on concurrent connections and requests

- Outlier Detection: Circuit breaker for removing unhealthy instances

- Client TLS Settings: Encryption configuration for service-to-service communication

- Port Traffic Policy: Different policies for different ports

1. Load Balancer Settings

Purpose: Choose algorithm for distributing traffic across pods in a subset

Simple Load Balancing Algorithms

Configuration example:

```yaml
spec:
  host: customers.default.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
  subsets:
  - name: v1
    labels:
      version: v1
```

Available algorithms:

| Algorithm | Behavior | Best For | Example |
|---|---|---|---|
| ROUND_ROBIN | Distributes requests evenly in sequence | Even load distribution | Pod1 → Pod2 → Pod3 → Pod1 |
| LEAST_REQUEST | Routes to a pod with the fewest outstanding requests | Varying request durations | Long-running requests |
| LEAST_CONN | Deprecated name for LEAST_REQUEST | Existing configurations | Long-running requests |
| RANDOM | Selects pod randomly | Simple, stateless workloads | Quick requests |
| PASSTHROUGH | Uses original destination (no load balancing) | Direct pod access | Debugging |

Default behavior: If no algorithm is specified, Istio picks one itself. Current releases default to LEAST_REQUEST; older releases used ROUND_ROBIN.
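The three basic strategies are easy to model in Python to see how they differ. This is a sketch for intuition only, and the class and function names are hypothetical. The least-request function scans every pod, which is the idea behind LEAST_CONN / LEAST_REQUEST. Envoy's least-request balancer instead compares a small random sample of hosts (two by default) rather than all of them.

```python
import itertools
import random
from typing import Dict, List


class RoundRobin:
    """ROUND_ROBIN: cycle through pods in a fixed order (Pod1 -> Pod2 -> Pod3 -> Pod1)."""

    def __init__(self, pods: List[str]) -> None:
        self._cycle = itertools.cycle(pods)

    def pick(self) -> str:
        return next(self._cycle)


def pick_random(pods: List[str], rng: random.Random) -> str:
    """RANDOM: any pod with equal probability."""
    return rng.choice(pods)


def pick_least_request(in_flight: Dict[str, int]) -> str:
    """Least request: pod with the fewest in-flight requests (ties go to the first name)."""
    return min(sorted(in_flight), key=lambda pod: in_flight[pod])


if __name__ == "__main__":
    rr = RoundRobin(["pod1", "pod2", "pod3"])
    print([rr.pick() for _ in range(4)])                           # ['pod1', 'pod2', 'pod3', 'pod1']
    print(pick_least_request({"pod1": 5, "pod2": 1, "pod3": 3}))  # pod2
```

Round robin ignores how busy a pod is. That is why least-request does better when some requests take much longer than others.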

Hash-Based Load Balancing (Session Affinity)

Purpose: Ensure same user always reaches same pod (sticky sessions)

Configuration example:

```yaml
trafficPolicy:
  loadBalancer:
    consistentHash:
      httpCookie:
        name: location
        ttl: 4s
```

Configuration breakdown:

- consistentHash: Enables hash-based routing

- httpCookie.name: Cookie name to hash (location)

- ttl: Cookie lifetime (4 seconds)

What this does: Routes requests with same cookie value to same pod consistently.

Hash options:

| Hash Type | Field | Use Case | Example |
|---|---|---|---|
| httpCookie | name, ttl | Session persistence | Shopping cart |
| httpHeaderName | Header name | User-based routing | x-user-id |
| httpQueryParameterName | Query param | URL-based routing | ?user=123 |
| useSourceIp | Boolean | Client IP affinity | Clients without cookies or user headers |

Use cases: Shopping carts, user sessions, stateful applications, WebSocket connections.
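Envoy implements consistentHash with a ring hash (or Maglev) table. The Python sketch below is a simplified ring hash. It assumes SHA-256 as the hash function and 100 virtual nodes per pod, both arbitrary choices here, and the class name is hypothetical. It shows why the same cookie value keeps landing on the same pod, and why removing one pod only remaps the keys that pod owned.

```python
import bisect
import hashlib
from typing import List


def stable_hash(key: str) -> int:
    # Python's built-in hash() is randomized per process, so use SHA-256 instead.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Minimal consistent-hash ring with virtual nodes."""

    def __init__(self, pods: List[str], vnodes: int = 100) -> None:
        # Each pod appears `vnodes` times on the ring to even out the load.
        self._ring = sorted(
            (stable_hash(f"{pod}#{i}"), pod) for pod in pods for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def pick(self, cookie_value: str) -> str:
        """Walk clockwise from the key's hash to the next virtual node (wrapping around)."""
        idx = bisect.bisect(self._points, stable_hash(cookie_value)) % len(self._ring)
        return self._ring[idx][1]


if __name__ == "__main__":
    ring = HashRing(["pod-a", "pod-b", "pod-c"])
    print(ring.pick("session-42") == ring.pick("session-42"))  # True: same cookie, same pod
```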

2. Connection Pool Settings

Purpose: Limit concurrent connections and requests to prevent service overload

Configuration example:

```yaml
trafficPolicy:
  connectionPool:
    http:
      http2MaxRequests: 50
      http1MaxPendingRequests: 10
      maxRequestsPerConnection: 2
```

Configuration breakdown:

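- http2MaxRequests: Maximum concurrent requests to the destination (50); despite the name, it applies to both HTTP/1.1 and HTTP/2

- http1MaxPendingRequests: Maximum requests queued while waiting for a connection (10); despite the name, it also applies to both protocols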

- maxRequestsPerConnection: Maximum requests per connection (2)

What this does: Limits concurrent requests to 50, protecting service from overload.

Key fields:

| Field | Protocol | Purpose | Example Value |
|---|---|---|---|
| http1MaxPendingRequests | HTTP/1.1 and HTTP/2 | Queue limit for pending requests | 10 |
| http2MaxRequests | HTTP/1.1 and HTTP/2 | Concurrent request limit | 50 |
| maxRequestsPerConnection | Both | Requests per connection | 2 |
| maxRetries | Both | Concurrent retry limit | 3 |

Use cases: Protecting services from traffic spikes, preventing resource exhaustion, capacity management.
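The interplay of the two limits can be pictured as "run up to N, queue up to M, reject the rest". The Python model below is a simplification of Envoy's circuit-breaker counters, and its names are hypothetical. In Envoy, requests that overflow these limits typically fail fast with a 503 rather than waiting.

```python
from collections import deque
from typing import Deque


class ConnectionPoolLimiter:
    """Simplified model of the connectionPool.http limits.

    Up to `max_active` requests run at once (http2MaxRequests); up to
    `max_pending` more wait in a queue (http1MaxPendingRequests); anything
    beyond that is rejected straight away instead of piling up.
    """

    def __init__(self, max_active: int = 50, max_pending: int = 10) -> None:
        self.max_active = max_active
        self.max_pending = max_pending
        self.active = 0
        self.pending: Deque[str] = deque()

    def submit(self, request_id: str) -> str:
        """Admit, queue, or reject one incoming request."""
        if self.active < self.max_active:
            self.active += 1
            return "active"
        if len(self.pending) < self.max_pending:
            self.pending.append(request_id)
            return "queued"
        return "rejected"

    def finish(self) -> None:
        """A running request completed: hand its slot to the oldest queued request."""
        if self.pending:
            self.pending.popleft()  # the queued request starts; active count unchanged
        elif self.active > 0:
            self.active -= 1


if __name__ == "__main__":
    pool = ConnectionPoolLimiter(max_active=50, max_pending=10)
    outcomes = [pool.submit(f"req-{i}") for i in range(65)]
    print({o: outcomes.count(o) for o in ("active", "queued", "rejected")})
    # {'active': 50, 'queued': 10, 'rejected': 5}
```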

3. Outlier Detection (Circuit Breaker)

Purpose: Automatically remove unhealthy pods from load balancing pool, allowing them time to recover

Configuration example:

```yaml
trafficPolicy:
  connectionPool:
    http:
      http2MaxRequests: 500
      maxRequestsPerConnection: 10
  outlierDetection:
    consecutiveErrors: 10
    interval: 5m
    baseEjectionTime: 10m
    maxEjectionPercent: 50
```

Configuration breakdown:

- consecutiveErrors: Failure threshold before ejection (10 errors)

- interval: Time between ejection analysis sweeps (every 5 minutes)

- baseEjectionTime: Minimum ejection duration (10 minutes)

- maxEjectionPercent: Maximum percentage of hosts that can be ejected (50%)

What this does:

- Runs an ejection analysis sweep every 5 minutes

- If pod fails 10 consecutive times → eject from pool

- Keep ejected for minimum 10 minutes

- Never eject more than 50% of pods (prevents cascading failure)

Key fields:

| Field | Purpose | Example Value | Impact |
|---|---|---|---|
| consecutiveErrors | Failure threshold (deprecated; prefer consecutive5xxErrors) | 10 | Sensitivity to failures |
| interval | Ejection analysis frequency | 5m | Detection speed |
| baseEjectionTime | Minimum ejection duration | 10m | Recovery time |
| maxEjectionPercent | Maximum ejection percentage | 50 | Availability protection |
| consecutive5xxErrors | HTTP 5xx threshold | 5 | Server error sensitivity |

Analogy: Like benching an injured player - remove from rotation, allow recovery time, then return to game.

Use cases: Automatic failure handling, self-healing systems, preventing cascading failures.
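The ejection logic can be sketched in Python to show how the fields interact. This is a simplified model with hypothetical names, not Envoy's implementation:

- A pod is ejected as soon as its streak of consecutive errors reaches the threshold.
- The `interval` sweep is ignored.
- Each repeat ejection lasts baseEjectionTime × the number of times the pod has been ejected, which matches how Envoy lengthens repeat ejections.
- No new ejection happens if it would push the ejected share above maxEjectionPercent.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class OutlierDetector:
    """Simplified consecutive-error ejection with a maxEjectionPercent cap."""

    pods: List[str]
    consecutive_errors: int = 10        # consecutiveErrors
    base_ejection_s: float = 600.0      # baseEjectionTime: 10m
    max_ejection_percent: int = 50      # maxEjectionPercent
    _streak: Dict[str, int] = field(default_factory=dict, init=False)
    _times_ejected: Dict[str, int] = field(default_factory=dict, init=False)
    _ejected_until: Dict[str, float] = field(default_factory=dict, init=False)

    def is_ejected(self, pod: str, now: float) -> bool:
        return self._ejected_until.get(pod, 0.0) > now

    def healthy_pods(self, now: float) -> List[str]:
        """Pods currently eligible for load balancing."""
        return [p for p in self.pods if not self.is_ejected(p, now)]

    def record(self, pod: str, ok: bool, now: float) -> None:
        """Record one response from `pod` at time `now` (in seconds)."""
        if ok:
            self._streak[pod] = 0
            return
        self._streak[pod] = self._streak.get(pod, 0) + 1
        if self._streak[pod] < self.consecutive_errors or self.is_ejected(pod, now):
            return
        cap = len(self.pods) * self.max_ejection_percent // 100
        ejected_now = len(self.pods) - len(self.healthy_pods(now))
        if ejected_now >= cap:
            return  # ejecting another pod would exceed maxEjectionPercent
        self._times_ejected[pod] = self._times_ejected.get(pod, 0) + 1
        # Each repeat ejection lasts longer: baseEjectionTime x times ejected.
        self._ejected_until[pod] = now + self.base_ejection_s * self._times_ejected[pod]
        self._streak[pod] = 0


if __name__ == "__main__":
    d = OutlierDetector(pods=["a", "b", "c", "d"], consecutive_errors=3)
    for _ in range(3):
        d.record("a", ok=False, now=0)
    print(d.healthy_pods(now=1))  # ['b', 'c', 'd']
```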

4. Client TLS Settings

Purpose: Configure TLS/SSL encryption for service-to-service communication

Configuration example:

```yaml
trafficPolicy:
  tls:
    mode: MUTUAL
    clientCertificate: /etc/certs/cert.pem
    privateKey: /etc/certs/key.pem
    caCertificates: /etc/certs/ca.pem
```

Configuration breakdown:

- mode: TLS mode (MUTUAL = two-way authentication)

- clientCertificate: Client certificate path

- privateKey: Private key path

- caCertificates: Certificate authority certificates

TLS modes:

| Mode | Description | Authentication | Use Case |
|---|---|---|---|
| DISABLE | No encryption | None | Development only |
| SIMPLE | One-way TLS | Server only | Public APIs |
| MUTUAL | Two-way TLS | Both client and server | Internal services |
| ISTIO_MUTUAL | Istio-managed mTLS | Automatic | Recommended for mesh |

Recommendation: Use ISTIO_MUTUAL for automatic certificate management within the mesh.

Use cases: Secure service-to-service communication, compliance requirements, zero-trust networking.

5. Port Traffic Policy

Purpose: Apply different traffic policies to different ports on the same service

Configuration example:

```yaml
trafficPolicy:
  portLevelSettings:
  - port:
      number: 80
    loadBalancer:
      simple: LEAST_CONN
  - port:
      number: 8000
    loadBalancer:
      simple: ROUND_ROBIN
  - port:
      number: 443
    connectionPool:
      http:
        http2MaxRequests: 100
```

Configuration breakdown:

- Port 80: Uses LEAST_CONN load balancing

- Port 8000: Uses ROUND_ROBIN load balancing

- Port 443: Limits to 100 concurrent requests (http2MaxRequests applies to both HTTP/1.1 and HTTP/2)

What this does: Applies different traffic policies based on port number.

Use cases:

- Different load balancing for HTTP vs HTTPS

- Stricter limits on admin ports

- Custom policies for metrics endpoints

- Port-specific connection limits

How VirtualService and DestinationRule Work Together

Traffic flow sequence:

1. Request arrives at service mesh

↓

2. VirtualService evaluates routing rules (WHERE to send)

↓

3. VirtualService selects destination subset (e.g., v1 or v2)

↓

4. DestinationRule defines subset membership (pods with version: v1)

↓

5. DestinationRule applies traffic policies (HOW to send)

↓

6. Request routed to selected pod with configured policies

Example scenario:

VirtualService says:

- "Send 70% of traffic to subset v1"

- "Send 30% of traffic to subset v2"

DestinationRule says:

- "Subset v1 = pods with label version:v1"

- "Use ROUND_ROBIN load balancing for v1"

- "Limit to 50 concurrent requests for v1"

Result: 70% of traffic goes to v1 pods, distributed via ROUND_ROBIN, with 50 request limit.

Key principle: VirtualService handles routing decisions (WHERE), DestinationRule handles subset definitions and policies (WHAT and HOW).
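The WHERE / WHAT / HOW split can be seen end to end in a short Python model. The VirtualService weights pick a subset, the DestinationRule's subset membership gives the candidate pods, and its load-balancing policy picks one pod. The pod names are hypothetical. Connection limits are left out to keep the sketch short.

```python
import itertools
import random
from typing import Dict, List, Tuple

# VirtualService decides WHERE: subset weights from the example above.
VS_WEIGHTS: Dict[str, int] = {"v1": 70, "v2": 30}

# DestinationRule decides WHAT: subset -> member pods (hypothetical pod names).
SUBSET_PODS: Dict[str, List[str]] = {
    "v1": ["customers-v1-a", "customers-v1-b"],
    "v2": ["customers-v2-a"],
}

# DestinationRule decides HOW: round robin within each subset.
_rr = {subset: itertools.cycle(pods) for subset, pods in SUBSET_PODS.items()}


def route_request(rng: random.Random) -> Tuple[str, str]:
    """Return (subset, pod) for one request: weighted subset choice, then round robin."""
    subsets = list(VS_WEIGHTS)
    subset = rng.choices(subsets, weights=[VS_WEIGHTS[s] for s in subsets], k=1)[0]
    return subset, next(_rr[subset])


if __name__ == "__main__":
    rng = random.Random(1)
    for _ in range(5):
        print(route_request(rng))
```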

Traffic Policy Scope

Global vs Subset-Level Policies:

```yaml
spec:
  host: customers.default.svc.cluster.local
  trafficPolicy:          # Global policy (applies to all subsets)
    loadBalancer:
      simple: ROUND_ROBIN
  subsets:
  - name: v1
    labels:
      version: v1
    trafficPolicy:        # Subset-specific policy (overrides global)
      loadBalancer:
        simple: LEAST_CONN
  - name: v2
    labels:
      version: v2
    # Inherits global ROUND_ROBIN policy
```

Policy precedence: Subset-level policies override global policies.
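Precedence can be modeled as a merge of two dicts. The Python sketch below assumes overrides happen per top-level section (loadBalancer, connectionPool, outlierDetection, tls, ...). A section set on the subset replaces the global one as a whole, and sections the subset omits are inherited. That assumption is ours; check the DestinationRule reference for the exact merge behavior of your Istio version. The function name is hypothetical.

```python
import copy
from typing import Any, Dict, Optional

Policy = Dict[str, Any]


def effective_policy(global_policy: Policy, subset_policy: Optional[Policy] = None) -> Policy:
    """Merge a subset's trafficPolicy over the DestinationRule's global trafficPolicy."""
    merged = copy.deepcopy(global_policy)              # never mutate the inputs
    merged.update(copy.deepcopy(subset_policy or {}))  # subset sections win
    return merged


if __name__ == "__main__":
    global_policy = {"loadBalancer": {"simple": "ROUND_ROBIN"}}
    v1 = effective_policy(global_policy, {"loadBalancer": {"simple": "LEAST_CONN"}})
    v2 = effective_policy(global_policy)  # no subset-level trafficPolicy
    print(v1["loadBalancer"]["simple"], v2["loadBalancer"]["simple"])  # LEAST_CONN ROUND_ROBIN
```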

Traffic Policy Reference

| Policy Type | Purpose | Key Configuration | Use Case |
|---|---|---|---|
| Load Balancer | Distribute traffic across pods | simple: ROUND_ROBIN | Even distribution |
| Connection Pool | Limit concurrent connections | http2MaxRequests: 50 | Prevent overload |
| Outlier Detection | Remove unhealthy instances | consecutiveErrors: 10 | Circuit breaking |
| TLS Settings | Encryption configuration | mode: ISTIO_MUTUAL | Secure communication |
| Port Policy | Port-specific rules | portLevelSettings | Different port behaviors |

Configuration Examples by Use Case

High-Traffic Service

```yaml
trafficPolicy:
  connectionPool:
    http:
      http2MaxRequests: 1000
      maxRequestsPerConnection: 10
  loadBalancer:
    simple: LEAST_CONN
```

Stateful Application

```yaml
trafficPolicy:
  loadBalancer:
    consistentHash:
      httpCookie:
        name: session-id
        ttl: 3600s
```

Resilient Service

```yaml
trafficPolicy:
  connectionPool:
    http:
      http2MaxRequests: 100
  outlierDetection:
    consecutiveErrors: 5
    interval: 30s
    baseEjectionTime: 30s
```

Best Practices

Subset Management:

- Use consistent labeling strategy across all deployments (version: v1, version: v2)

- Keep subset names simple and descriptive (v1, v2, canary, stable)

- Document subset purposes and version differences

- Ensure pod labels match DestinationRule subset selectors

Load Balancing:

- Use ROUND_ROBIN for even distribution across pods (default choice)

- Use LEAST_CONN for requests with varying processing times

- Use consistentHash for session affinity requirements (stateful apps)

- Test load balancing behavior under realistic traffic patterns

Connection Pool Settings:

- Start with conservative limits based on service capacity

- Monitor connection pool metrics (queue depth, rejections)

- Adjust limits gradually based on observed behavior

- Consider downstream service capacity when setting limits

Circuit Breaking:

- Set conservative thresholds initially (5-10 consecutive errors)

- Monitor ejection rates and adjust based on false positives

- Never eject more than 50% of instances (prevent cascading failures)

- Balance sensitivity vs availability

TLS Configuration:

- Use ISTIO_MUTUAL for automatic certificate management

- Only use DISABLE in development environments

- Regularly rotate certificates for MUTUAL mode

- Monitor certificate expiration dates
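A minimal DestinationRule enforcing the recommended mode. With ISTIO_MUTUAL the sidecar presents Istio-issued certificates, so no certificate paths are configured; the resource name is illustrative.

```yaml
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: customers-mtls        # hypothetical name
  namespace: default
spec:
  host: customers.default.svc.cluster.local
  trafficPolicy:
    tls:
      mode: ISTIO_MUTUAL      # mesh-managed certificates, rotated automatically
```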

Testing:

- Test subset routing before production deployment

- Verify traffic policies under load

- Validate circuit breaker behavior with failure injection

- Monitor metrics after configuration changes

Common Patterns

Pattern 1: Canary Deployment with Circuit Breaking

spec:
  host: customers.default.svc.cluster.local
  trafficPolicy:
    connectionPool:
      http:
        http2MaxRequests: 100
    outlierDetection:
      consecutive5xxErrors: 5
      interval: 30s
      baseEjectionTime: 30s
  subsets:
  - name: stable
    labels:
      version: v1
  - name: canary
    labels:
      version: v2
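The DestinationRule in Pattern 1 only defines the stable and canary subsets; a VirtualService decides how much traffic each receives. A sketch of a 90/10 canary split (the weights are illustrative and must add up to 100):

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers
  namespace: default
spec:
  hosts:
  - customers.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: stable
      weight: 90              # most traffic stays on v1
    - destination:
        host: customers.default.svc.cluster.local
        subset: canary
      weight: 10              # a small share goes to the v2 canary
```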

Pattern 2: Session Affinity for Stateful Apps

spec:
  host: app.default.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpCookie:
          name: session-id
          ttl: 3600s
  subsets:
  - name: v1
    labels:
      version: v1
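Cookie-based hashing is one option; when clients already send a stable identifier, hashing on a request header gives the same affinity without a cookie. A sketch, assuming a hypothetical x-user-id header set by the caller:

```yaml
trafficPolicy:
  loadBalancer:
    consistentHash:
      httpHeaderName: x-user-id   # hypothetical header; same value maps to the same pod
```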

Pattern 3: Different Policies per Subset

spec:
  host: api.default.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
    trafficPolicy:
      connectionPool:
        http:
          http2MaxRequests: 50 # Conservative for old version
  - name: v2
    labels:
      version: v2
    trafficPolicy:
      connectionPool:
        http:
          http2MaxRequests: 200 # Higher for optimized version

Subset = Named group of pods representing a service version (identified by labels)

DestinationRule = Defines subsets AND traffic policies (WHAT exists and HOW to handle)

VirtualService = Defines routing rules (WHERE to send traffic)

Relationship: VirtualService routes to subsets defined by DestinationRule

Traffic policies = Applied AFTER routing decisions (load balancing, limits, circuit breaking)

Five policy types:

- Load Balancer = Distribution algorithm (ROUND_ROBIN, LEAST_REQUEST, consistentHash)

- Connection Pool = Concurrent request limits (prevent overload)

- Outlier Detection = Circuit breaker (remove unhealthy pods)

- TLS Settings = Encryption configuration (ISTIO_MUTUAL recommended)

- Port Policy = Port-specific configurations

Policy scope = Global (all subsets) or subset-specific (overrides global)

Use cases: Version management, canary deployments, session affinity, resilience, security

Configuration-only = No application code changes required

Resiliency

What is Resiliency?

Resiliency = The ability to maintain service availability and functionality even when failures occur. It's not about preventing failures, but handling them gracefully to minimize impact.

Core principle: Failures are inevitable in distributed systems - design for failure, not perfection.

Goal: Minimize downtime, prevent cascading failures, and maintain acceptable service levels during partial system failures.

Analogy: Like having airbags in a car - you hope never to need them, but they're critical when accidents happen.

Istio Resiliency Mechanisms

Four main mechanisms for building resilient applications:

| Mechanism | Purpose | Configuration | Impact |
|---|---|---|---|
| Timeouts | Prevent indefinite waiting | VirtualService | Fail fast |
| Retries | Handle transient failures | VirtualService | Automatic recovery |
| Circuit Breaking | Prevent overload | DestinationRule | Load protection |
| Outlier Detection | Remove unhealthy instances | DestinationRule | Self-healing |

Key benefit: All mechanisms are configured declaratively - no application code changes required.

1. Timeouts

Purpose: Prevent requests from waiting indefinitely for slow or unresponsive services

Why needed: Without timeouts, slow services can block threads, exhaust resources, and cause cascading failures throughout the system.

Configuration example:

apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers-service
  namespace: default
spec:
  hosts:
  - customers.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
    timeout: 10s

Configuration breakdown:

- timeout: 10s: Maximum wait time for response (10 seconds)

- Applies to: Entire request-response cycle

- On timeout: Returns HTTP 504 (Gateway Timeout) to caller

- Upstream request: The sidecar abandons the in-flight request, but the backend may already have done some or all of the work

What this does:

- Waits maximum 10 seconds for service response

- If no response within 10s → Returns timeout error

- Prevents indefinite blocking of client threads

- Allows client to handle timeout gracefully

Timeout values guidance:

| Service Type | Recommended Timeout | Rationale |
|---|---|---|
| Fast APIs | 1-3s | Quick operations, fail fast |
| Standard APIs | 5-10s | Normal processing time |
| Database queries | 10-30s | Complex queries may take longer |
| Batch operations | 30-60s | Long-running operations |
| External APIs | 15-30s | Account for network latency |
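Because the timeout is set per route, one service can apply different values from this table to different endpoints. A sketch, assuming a hypothetical orders service whose /batch endpoints are long-running; routes are evaluated in order, so the specific match comes first and the catch-all route last.

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: orders                     # hypothetical service
  namespace: default
spec:
  hosts:
  - orders.default.svc.cluster.local
  http:
  - match:
    - uri:
        prefix: /batch             # hypothetical batch endpoints
    route:
    - destination:
        host: orders.default.svc.cluster.local
    timeout: 60s                   # batch operations
  - route:                         # everything else is a standard API
    - destination:
        host: orders.default.svc.cluster.local
    timeout: 5s
```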

Use cases: Preventing slow services from blocking resources, failing fast, maintaining system responsiveness.

2. Retries

Purpose: Automatically retry failed requests to handle transient failures without manual intervention

When to use: Transient network issues, temporary service unavailability, intermittent errors

Critical requirement: Only use for idempotent operations (safe to repeat without side effects)

Idempotent operations:

- ✅ Safe: GET, HEAD, OPTIONS, PUT (with same data), DELETE (same resource)

- ❌ Unsafe: POST (creates new resources), PATCH (not guaranteed to be idempotent), non-idempotent PUT operations

Why idempotency matters: Retrying non-idempotent operations can cause duplicate actions (e.g., charging a credit card twice).
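One way to apply this rule is to match on the HTTP method and enable retries only for idempotent reads. A sketch: GET requests get retries, while every other method falls through to a route with attempts: 0, which disables retries explicitly (Istio otherwise applies its own small default retry policy).

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers-service
  namespace: default
spec:
  hosts:
  - customers.default.svc.cluster.local
  http:
  - match:
    - method:
        exact: GET                 # idempotent reads: safe to retry
    route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
    retries:
      attempts: 3
      perTryTimeout: 2s
      retryOn: 5xx,connect-failure,reset
  - route:                         # POST, PATCH and everything else
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
    retries:
      attempts: 0                  # no retries for non-idempotent calls
```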

Configuration example:

apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: customers-service
  namespace: default
spec:
  hosts:
  - customers.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: customers.default.svc.cluster.local
        subset: v1
    retries:
      attempts: 3
      perTryTimeout: 2s
      retryOn: 5xx,connect-failure,reset

Configuration breakdown:

- attempts: 3: Maximum retry attempts (total tries = 1 initial + 3 retries = 4 total)

- perTryTimeout: 2s: Timeout for each individual attempt (2 seconds)

- retryOn: Conditions that trigger retry (comma-separated list)

What this does:

- Attempts request up to 3 additional times after initial failure

- Each attempt has 2-second timeout

- Only retries on specified failure conditions

- Total maximum time: roughly 8 seconds (4 attempts × 2s each), plus a short automatic backoff between attempts

Retry conditions reference:

| Condition | When It Triggers | Use Case | Example |
|---|---|---|---|
| 5xx | Server errors (500-599) | Backend failures | 500, 503, 504 |
| connect-failure | Cannot establish connection | Network issues | Connection refused |
| reset | Connection reset by peer | Abrupt disconnections | TCP reset |
| gateway-error | Bad gateway responses | Proxy errors | 502, 503, 504 |
| refused-stream | HTTP/2 stream refused | Protocol issues | Stream rejection |
| retriable-4xx | Specific 4xx errors | Client errors | 409 Conflict |
| retriable-status-codes | Custom status codes | Specific codes | 429 Rate Limit |
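The retriable-status-codes condition needs the codes themselves. A sketch that retries on 429, assuming the behavior described in Istio's HTTPRetry reference, where numeric entries in retryOn are added to the retriable status codes; attempts and perTryTimeout are illustrative. Retrying 429 only helps if the limit clears quickly, so keep attempts low to avoid adding load.

```yaml
http:
- route:
  - destination:
      host: customers.default.svc.cluster.local
      subset: v1
  retries:
    attempts: 2
    perTryTimeout: 3s
    # 429 is treated as a retriable status code alongside the named conditions
    retryOn: connect-failure,refused-stream,retriable-status-codes,429
```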

Important rules:

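- Total time constraint: If the route also sets a timeout, it caps the combined time of all attempts; once it expires, no further retries are made

- Exponential backoff: Envoy already waits between retries using jittered exponential backoff (25 ms base interval by default), which is why the example does not configure it

- Retry budget: Limit retries to prevent retry storms, e.g., keep attempts low and cap outstanding retries to a destination with connectionPool.http.maxRetries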
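Combining both mechanisms shows the total time constraint in practice. In this sketch (values illustrative), the 6s route timeout bounds the whole sequence, so only about three of the four possible 2s attempts can complete before the caller receives a 504.

```yaml
http:
- route:
  - destination:
      host: customers.default.svc.cluster.local
      subset: v1
  timeout: 6s                # overall budget, including every retry
  retries:
    attempts: 3              # up to 4 tries in theory...
    perTryTimeout: 2s        # ...but only about 3 x 2s fit inside 6s
    retryOn: 5xx,connect-failure,reset
```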